Modelling intertidal elevation using tidal data¶

Products used: s2_l2a

Prerequisites: For more information about the tidal modelling step of this analysis, refer to the Tidal modelling notebook

Keywords: data used; Sentinel-2, water; tide modelling, water; waterline extraction, band index; NDWI, data methods; compositing

Background¶

Intertidal environments support important ecological habitats (e.g. sandy beaches and shores, tidal flats and rocky shores and reefs), and provide many valuable benefits such as storm surge protection, carbon storage and natural resources for recreational and commercial use. However, intertidal zones are faced with increasing threats from coastal erosion, land reclamation (e.g. port construction), and sea level rise. Accurate elevation data describing the height and shape of the coastline is needed to help predict when and where these threats will have the greatest impact. However, this data is expensive and challenging to map across the entire intertidal zone of a continent the size of Africa.

Digital Earth Africa use case¶

The rise and fall of the tide can be used to reveal the three-dimensional shape of the coastline by mapping the boundary betweeen water and land across a range of known tides (e.g. from low tide to high tide). Assuming that the land-water boundary is a line of constant height relative to mean sea level (MSL), elevations can be modelled for the area of coastline located between the lowest and highest observed tide.

Imagery from satellites such as the Copernicus Sentinel-2 program is available for free for the entire planet, making satellite imagery a powerful and cost-effective tool for modelling the 3D shape and structure of the intertidal zone at regional or national scale.

Reference: Bishop-Taylor et al. 2019, the first 3D model of Australia’s entire coastline: the National Intertidal Digital Elevation Model.

Description¶

In this example, we demonstrate a simplified version of the National Intertidal Digital Elevation Model (NIDEM) method that combines data from Sentinel-2 satellite with tidal modelling, image compositing and spatial interpolation techniques. We first map the boundary between land and water from low to high tide, and use this information to generate smooth, continuous 3D elevation maps of the intertidal zone. The resulting data may assist in mapping the habitats of threatened coastal species, identifying areas of coastal erosion, planning for extreme events such as storm surges and flooding, and improving models of how sea level rise will affect the coastline. This worked example takes users through the code required to:

Load in a cloud-free Sentinel-2 time series.

Compute a water index (NDWI).

Tag and sort satellite images by tide height.

Create “summary” or composite images that show the distribution of land and water at discrete intervals of the tidal range (e.g. at low tide, high tide).

Extract and visualise the topography of the intertidal zone as depth contours.

Interpolate depth contours into a smooth, continuous Digital Elevation Model (DEM) of the intertidal zone.

Getting started¶

To run this analysis, run all the cells in the notebook, starting with the “Load packages” cell.

After finishing the analysis, return to the “Analysis parameters” cell, modify some values (e.g. choose a different location or time period to analyse) and re-run the analysis. There are additional instructions on modifying the notebook at the end.

Load packages¶

Load key Python packages and supporting functions for the analysis.

[1]:

pip install -q git+https://github.com/digitalearthafrica/deafrica-sandbox-notebooks.git@coastlines_s2s1#subdirectory=Tools

Note: you may need to restart the kernel to use updated packages.

[2]:

%matplotlib inline

import datacube

import xarray as xr

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from datacube.utils.cog import write_cog

from deafrica_tools.datahandling import load_ard, mostcommon_crs

from deafrica_tools.bandindices import calculate_indices

from deafrica_tools.coastal import tidal_tag

from deafrica_tools.spatial import subpixel_contours, interpolate_2d, contours_to_arrays

from deafrica_tools.plotting import display_map, map_shapefile, rgb

from deafrica_tools.dask import create_local_dask_cluster

/usr/local/lib/python3.10/dist-packages/geopandas/_compat.py:124: UserWarning: The Shapely GEOS version (3.11.1-CAPI-1.17.1) is incompatible with the GEOS version PyGEOS was compiled with (3.10.4-CAPI-1.16.2). Conversions between both will be slow.

warnings.warn(

Set up a Dask cluster¶

Dask can be used to better manage memory use down and conduct the analysis in parallel. For an introduction to using Dask with Digital Earth Africa, see the Dask notebook.

Note: We recommend opening the Dask processing window to view the different computations that are being executed; to do this, see the Dask dashboard in DE Africa section of the Dask notebook.

To use Dask, set up the local computing cluster using the cell below.

[3]:

create_local_dask_cluster()

Client

Client-184fa6f9-1728-11ee-8bd3-26e3c91630bf

| Connection method: Cluster object | Cluster type: distributed.LocalCluster |

| Dashboard: /user/whusggliuqx@gmail.com/proxy/8787/status |

Cluster Info

LocalCluster

16785895

| Dashboard: /user/whusggliuqx@gmail.com/proxy/8787/status | Workers: 1 |

| Total threads: 4 | Total memory: 26.21 GiB |

| Status: running | Using processes: True |

Scheduler Info

Scheduler

Scheduler-4966e58c-847c-45b0-bace-defc4f24c388

| Comm: tcp://127.0.0.1:35703 | Workers: 1 |

| Dashboard: /user/whusggliuqx@gmail.com/proxy/8787/status | Total threads: 4 |

| Started: Just now | Total memory: 26.21 GiB |

Workers

Worker: 0

| Comm: tcp://127.0.0.1:42047 | Total threads: 4 |

| Dashboard: /user/whusggliuqx@gmail.com/proxy/37919/status | Memory: 26.21 GiB |

| Nanny: tcp://127.0.0.1:38013 | |

| Local directory: /tmp/dask-worker-space/worker-__cvifnz | |

Connect to the datacube¶

Activate the datacube database, which provides functionality for loading and displaying stored Earth observation data.

[4]:

dc = datacube.Datacube(app='Intertidal_elevation_Sentinel-2')

Analysis parameters¶

The following cell set important parameters for the analysis:

lat: The central latitude to analyse (e.g.-11.384).lon: The central longitude to analyse (e.g.43.287).buffer: The number of square degrees to load around the central latitude and longitude. For reasonable loading times, set this as0.1or lower.time_range: The date range to analyse (e.g.('2017', '2022'))

If running the notebook for the first time, keep the default settings below. This will demonstrate how the analysis works and provide meaningful results. The example generates an intertidal elevation model for an area off the coast of Guinea Bissau.

To ensure that the tidal modelling part of this analysis works correctly, please make sure the centre of the study area is located over water when setting lat and lon.

[5]:

# Define the area of interest

lat =-19.25612

lon= 44.32470

buffer = 0.04

# Combine central lat,lon with buffer to get area of interest

lat_range = (lat-buffer, lat+buffer)

lon_range = (lon-buffer, lon+buffer)

# Set the range of dates for the analysis

time_range = ('2017', '2022')

View the selected location¶

The next cell will display the selected area on an interactive map. Feel free to zoom in and out to get a better understanding of the area you’ll be analysing. Clicking on any point of the map will reveal the latitude and longitude coordinates of that point.

[6]:

display_map(x=lon_range, y=lat_range)

/home/jovyan/.local/lib/python3.10/site-packages/deafrica_tools/plotting.py:284: FutureWarning: This function is deprecated. See: https://pyproj4.github.io/pyproj/stable/gotchas.html#upgrading-to-pyproj-2-from-pyproj-1

all_longitude, all_latitude = transform(Proj(crs), Proj("EPSG:4326"), all_x, all_y)

[6]:

Load cloud-masked Sentinel-2 data¶

The first step in this analysis is to load in Sentinel-2 data for the lat_range, lon_range and time_range we provided above. The code below uses the load_ard function to load in Sentinel-2 analysis-ready data for the area and time specified. For more infmation, see the Using load_ard notebook. The function will also automatically mask out clouds from the dataset, allowing us to focus on pixels that contain useful data:

[7]:

# Create the 'query' dictionary object, which contains the longitudes,

# latitudes and time provided above

query = {

'x': lon_range,

'y': lat_range,

'time': time_range,

'measurements': ['red', 'green', 'blue', 'nir'],

'resolution': (-10, 10),

}

# Identify the most common projection system in the input query

output_crs = mostcommon_crs(dc=dc, product='s2_l2a', query=query)

# Load available data

s2_ds = load_ard(dc=dc,

products=['s2_l2a'],

output_crs=output_crs,

min_gooddata=0.5,

group_by='solar_day',

dask_chunks={},

**query)

/home/jovyan/.local/lib/python3.10/site-packages/deafrica_tools/datahandling.py:244: UserWarning: Setting 'min_gooddata' percentage to > 0.0 will cause dask arrays to compute when loading pixel-quality data to calculate 'good pixel' percentage. This can slow the return of your dataset.

warnings.warn(

Using pixel quality parameters for Sentinel 2

Finding datasets

s2_l2a

Counting good quality pixels for each time step

/usr/local/lib/python3.10/dist-packages/rasterio/warp.py:344: NotGeoreferencedWarning: Dataset has no geotransform, gcps, or rpcs. The identity matrix will be returned.

_reproject(

Filtering to 644 out of 819 time steps with at least 50.0% good quality pixels

Applying pixel quality/cloud mask

Returning 644 time steps as a dask array

Once the load is complete, examine the data by printing it in the next cell. The Dimensions argument revels the number of time steps in the data set, as well as the number of pixels in the x (longitude) and y (latitude) dimensions.

[8]:

print(s2_ds)

<xarray.Dataset>

Dimensions: (time: 644, y: 889, x: 845)

Coordinates:

* time (time) datetime64[ns] 2017-01-07T07:23:27 ... 2022-12-27T07:...

* y (y) float64 7.875e+06 7.875e+06 ... 7.866e+06 7.866e+06

* x (x) float64 4.248e+05 4.248e+05 ... 4.332e+05 4.333e+05

spatial_ref int32 32738

Data variables:

red (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

green (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

blue (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

nir (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

Attributes:

crs: epsg:32738

grid_mapping: spatial_ref

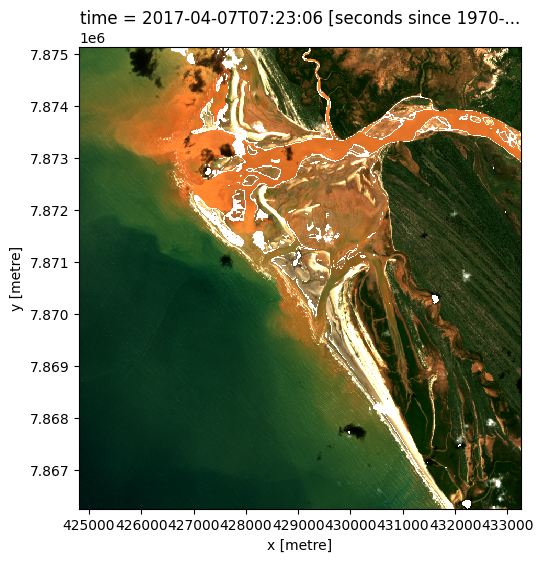

Plot example timestep in true colour¶

To visualise the data, use the pre-loaded rgb utility function to plot a true colour image for a given time-step. White areas indicate where clouds or other invalid pixels in the image have been masked.

Change the value for timestep and re-run the cell to plot a different timestep (timesteps are numbered from 0 to n_time - 1 where n_time is the total number of timesteps; see the time listing under the Dimensions category in the dataset print-out above).

[9]:

# Set the timesteps to visualise

timestep = 12

# Generate RGB plots at each timestep

rgb(s2_ds, index=timestep)

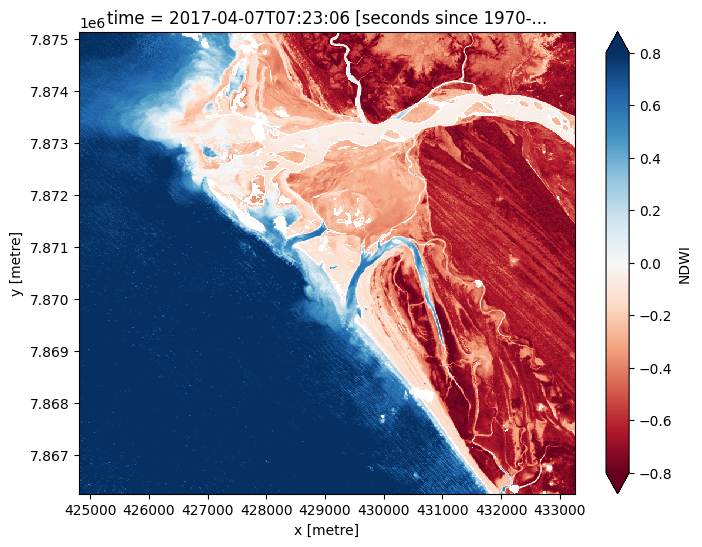

Compute Normalised Difference Water Index¶

To extract intertidal depth contours, we need to be able to seperate water from land in our study area. To do this, we can use our Sentinel-2 data to calculate a water index called the Normalised Difference Water Index, or NDWI. This index uses the ratio of green and near-infrared radiation to identify the presence of water. The formula is:

where Green is the green band and NIR is the near-infrared band.

When it comes to interpreting the index, High values (greater than 0, blue colours) typically represent water pixels, while low values (less than 0, red colours) represent land. You can use the cell below to calculate and plot one of the images after calculating the index.

[10]:

# Calculate the water index

s2_ds = calculate_indices(s2_ds, index='NDWI',

satellite_mission ='s2')

# Plot the resulting image for the same timestep selected above

s2_ds.NDWI.isel(time=timestep).plot(cmap='RdBu',

size=6,

vmin=-0.8,

vmax=0.8)

plt.show()

How does the plot of the index compare to the optical image from earlier? Was there water or land anywhere you weren’t expecting?

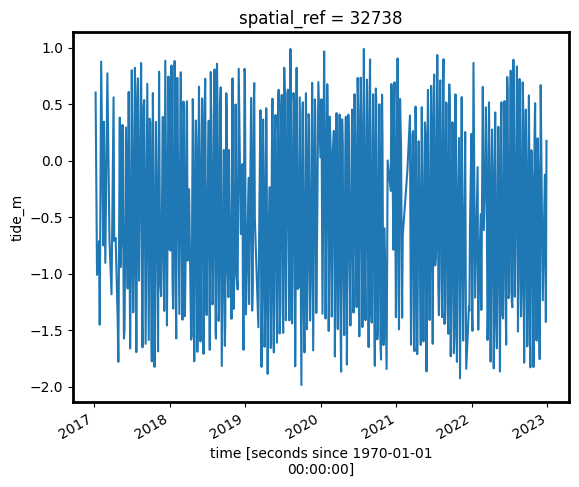

Model tide heights¶

The location of the shoreline can vary greatly from low to high tide. In the code below, we aim to calculate the height of the tide at the exact moment each Sentinel-2 image was acquired. This will allow us to built a sorted time series of images taken at low tide to high tide, which we will use to generate the intertidal elevation model.

The tidal_tag function below uses the OTPS TPXO8 tidal model to calculate the height of the tide at the exact moment each satellite image in our dataset was taken, and adds this as a new tide_m attribute in our dataset (for more information about this function, refer to the Tidal modelling notebook).

Note: this function can only model tides correctly if the centre of your study area is located over water. If this isn’t the case, you can specify a custom tide modelling location by passing a coordinate to

tidepost_latandtidepost_lon(e.g.tidepost_lat=11.228, tidepost_lon=-15.860).

[11]:

# Calculate tides for each timestep in the satellite dataset

s2_ds = tidal_tag(ds=s2_ds, tidepost_lat=None, tidepost_lon=None)

# Print the output dataset with new `tide_m` variable

print(s2_ds)

Setting tide modelling location from dataset centroid: 44.32, -19.26

Modelling tides using FES2014 tidal model

<xarray.Dataset>

Dimensions: (time: 644, y: 889, x: 845)

Coordinates:

* time (time) datetime64[ns] 2017-01-07T07:23:27 ... 2022-12-27T07:...

* y (y) float64 7.875e+06 7.875e+06 ... 7.866e+06 7.866e+06

* x (x) float64 4.248e+05 4.248e+05 ... 4.332e+05 4.333e+05

spatial_ref int32 32738

Data variables:

red (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

green (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

blue (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

nir (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

NDWI (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

tide_m (time) float64 0.6043 -1.009 -0.7102 ... -0.1226 -1.426 0.1767

Attributes:

crs: epsg:32738

grid_mapping: spatial_ref

[12]:

# Plot the resulting tide heights for each Sentinel-2 image:

s2_ds.tide_m.plot()

plt.show()

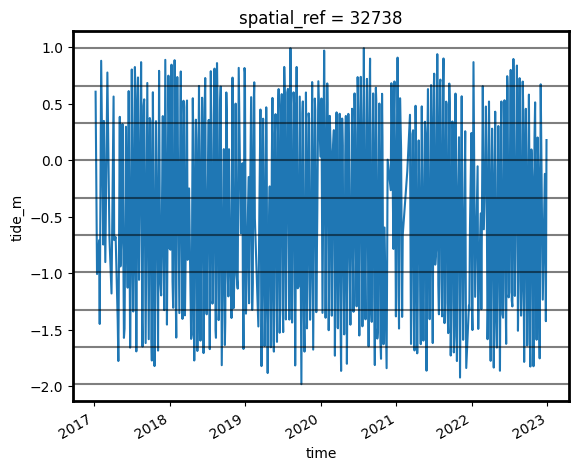

Create water index summary images from low to high tide¶

Using these tide heights, we can sort our Sentinel-2 dataset by tide height to reveal which parts of the landscape are inundated or exposed from low to high tide.

Individual remote sensing images can be affected by noise, including clouds, sunglint and poor water quality conditions (e.g. sediment). To produce cleaner images that can be compared more easily between tidal stages, we can create ‘summary’ images or composites that combine multiple images into one image to reveal the ‘typical’ or median appearance of the landscape at different tidal stages. In this case, we use the median as the summary statistic because it prevents strong outliers (like stray clouds) from skewing the data, which would not be the case if we were to use the mean.

In the code below, we take the time series of images, sort by tide and categorise each image into 9 discrete tidal intervals, ranging from the lowest (tidal interval 1) to the highest tides observed by Sentinel-2 (tidal interval 9). For more information on this method, refer to Sagar et al. 2018.

[13]:

# Sort every image by tide height

s2_ds = s2_ds.sortby('tide_m')

# Bin tide heights into 9 tidal intervals from low (1) to high tide (9)

binInterval = np.linspace(s2_ds.tide_m.min(),

s2_ds.tide_m.max(),

num=10)

tide_intervals = pd.cut(s2_ds.tide_m,

bins=binInterval,

labels=range(1, 10),

include_lowest=True)

# Add interval to dataset

s2_ds['tide_interval'] = xr.DataArray(tide_intervals,

[('time', s2_ds.time.data)])

print(s2_ds)

<xarray.Dataset>

Dimensions: (time: 644, y: 889, x: 845)

Coordinates:

* time (time) datetime64[ns] 2019-09-29T07:25:07 ... 2020-07-27T0...

* y (y) float64 7.875e+06 7.875e+06 ... 7.866e+06 7.866e+06

* x (x) float64 4.248e+05 4.248e+05 ... 4.332e+05 4.333e+05

spatial_ref int32 32738

Data variables:

red (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

green (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

blue (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

nir (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

NDWI (time, y, x) float32 dask.array<chunksize=(1, 889, 845), meta=np.ndarray>

tide_m (time) float64 -1.984 -1.925 -1.885 ... 0.9684 0.9894 0.9896

tide_interval (time) int64 1 1 1 1 1 1 1 1 1 1 1 ... 9 9 9 9 9 9 9 9 9 9 9

Attributes:

crs: epsg:32738

grid_mapping: spatial_ref

[14]:

s2_ds.sortby('time').tide_m.plot()

for i in binInterval: plt.axhline(i, c='black', alpha=0.5)

Now that we have a dataset where each image is classified into a discrete range of the tide, we can combine our images into a set of nine individual images that show where land and water is located from low to high tide. This step can take several minutes to process.

Note: We recommend opening the Dask processing window to view the different computations that are being executed; to do this, see the Dask dashboard in DE Africa section of the Dask notebook.

[ ]:

# For each interval, compute the median water index and tide height value

s2_intervals = (s2_ds[['tide_interval', 'NDWI', 'tide_m']]

.compute()

.groupby('tide_interval')

.median(dim='time'))

# Plot the resulting set of tidal intervals

s2_intervals.NDWI.plot(col='tide_interval', col_wrap=5, cmap='RdBu')

plt.show()

The plot above should make it clear how the shape and structure of the coastline changes significantly from low to high tide as low-lying tidal flats are quickly inundated by increasing water levels.

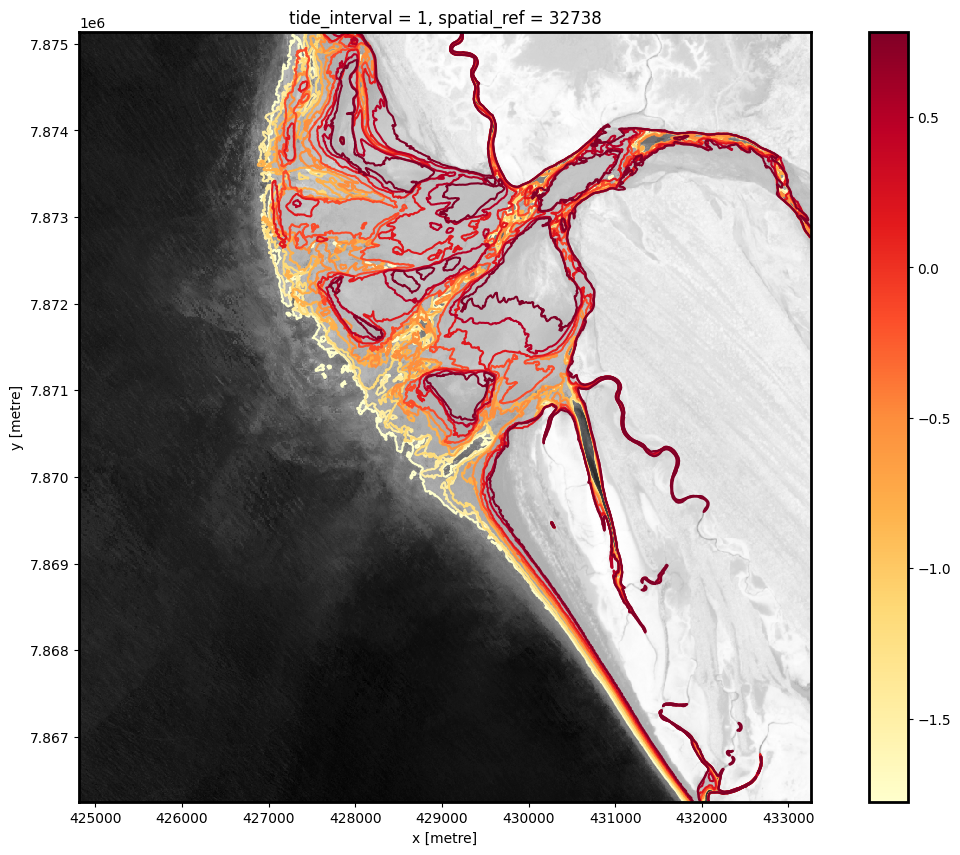

Extract depth contours from imagery¶

We now want to extract an accurate boundary between land and water for each of the tidal intervals above. The code below identifies the depth contours based on the boundary between land and water by tracing a line along pixels with a water index value of 0 (the boundary between land and water water index values). It returns a geopandas.GeoDataFrame with one depth contour for each tidal interval that is labelled with tide heights in metres relative to Mean Sea Level.

[16]:

# Set up attributes to assign to each waterline

attribute_df = pd.DataFrame({'tide_m': s2_intervals.tide_m.values})

# Extract waterlines

contours_gdf = subpixel_contours(da=s2_intervals.NDWI,

z_values=0,

crs=s2_ds.geobox.crs,

attribute_df=attribute_df,

min_vertices=20,

dim='tide_interval')

# Plot output shapefile over the top of the first tidal interval water index

fig, ax = plt.subplots(1, 1, figsize=(15, 10))

s2_intervals.NDWI.sel(tide_interval=1).plot(ax=ax,

cmap='Greys',

add_colorbar=False)

contours_gdf.plot(ax=ax, column='tide_m', cmap='YlOrRd', legend=True)

plt.show()

Operating in single z-value, multiple arrays mode

The above plot is a basic visualisation of the depth contours returned by the subpixel_contours function. Deeper contours (in m relative to Mean Sea Level) are coloured in yellow; more shallow contours are coloured in red. Now have the shapefile, we can use a more complex function to make an interactive plot for viewing the topography of the intertidal zone.

Plot interactive map of depth contours coloured by time¶

The next cell provides an interactive map with an overlay of the depth contours identified in the previous cell. Run it to view the map.

Zoom in to the map below to explore the resulting set of depth contours. Using this data, we can easily identify areas of the coastline which are only exposed in the lowest of tides, or other areas that are only covered by water during high tides.

[ ]:

map_shapefile(gdf=contours_gdf, attribute='tide_m', continuous=True)

While the contours above provide valuable information about the topography of the intertidal zone, we can extract additional information about the 3D structure of the coastline by converting them into an elevation raster (i.e. a Digital Elevation Model or DEM).

In the cell below, we convert the shapefile above into an array of points with X, Y and Z coordinates, where the Z coordinate is the point’s elevation relative to Mean Sea Level. We then use these XYZ points to interpolate smooth, continuous elevations across the intertidal zone using linear interpolation.

[ ]:

# First convert our contours shapefile into an array of XYZ points

xyz_array = contours_to_arrays(contours_gdf, 'tide_m')

# Interpolate these XYZ points over the spatial extent of the Sentinel-2 dataset

intertidal_dem = interpolate_2d(ds=s2_intervals,

x_coords=xyz_array[:, 0],

y_coords=xyz_array[:, 1],

z_coords=xyz_array[:, 2])

# Plot the output

intertidal_dem.plot(cmap='viridis', size=8, robust=True)

plt.show()

You can see in the output above that our interpolation results are very messy. This is because the interpolation extends across areas of our study area that are not affected by tides (e.g. areas of water located beyond the lowest observed tide, and on land). To clean up the data, we can restrict the DEM to only the area between the lowest and highest observed tides:

[ ]:

# Identify areas that are always wet (e.g. below low tide), or always dry

above_lowest = s2_intervals.isel(tide_interval=0).NDWI < 0

below_highest = s2_intervals.isel(tide_interval=-1).NDWI > 0

# Keep only pixels between high and low tide

intertidal_dem_clean = intertidal_dem.where(above_lowest & below_highest)

# Plot the cleaned dataset

intertidal_dem_clean.plot(cmap='viridis', size=8, robust=True)

plt.show()

Export intertidal DEM as a GeoTIFF¶

As a final step, we can take the intertidal DEM we created and export it as a GeoTIFF that can be loaded in GIS software like QGIS or ArcMap (to download the dataset from the Sandbox, locate it in the file browser to the left, right click on the file, and select “Download”).

[ ]:

# Export as a GeoTIFF

write_cog(intertidal_dem_clean,

fname='intertidal_dem_s2.tif',

overwrite=True)

Next steps¶

When you are done, return to the “Set up analysis” cell, modify some values (e.g. time_range, lat and lon) and rerun the analysis.

If you’re going to change the location, you’ll need to make sure Sentinel-2 data is available for the new location, which you can check at the DEAfrica Explorer.

National Intertidal Digital Elevation Model¶

For more information about the science behind this notebook, please refer to the scientific article outlining the application of this approach to the entire Australian coastline: Bishop-Taylor et al. 2019 Between the tides: Modelling the elevation of Australia’s exposed intertidal zone at continental scale.

Additional information¶

License: The code in this notebook is licensed under the Apache License, Version 2.0. Digital Earth Africa data is licensed under the Creative Commons by Attribution 4.0 license.

Contact: If you need assistance, please post a question on the Open Data Cube Slack channel or on the GIS Stack Exchange using the open-data-cube tag (you can view previously asked questions here). If you would like to report an issue with this notebook, you can file one on

Github.

Compatible datacube version:

[ ]:

print(datacube.__version__)

[ ]:

from datetime import datetime

datetime.today().strftime('%Y-%m-%d')

[ ]: