Vegetation Phenology

Keywords: data used; sentinel-2, data used; crop_mask, band index; NDVI, band index; EVI, phenology, analysis; time series

Background

Phenology is the study of plant and animal life cycles in the context of the seasons. It can be useful in understanding the life cycle trends of crops and how the growing seasons are affected by changes in climate. For more information, see the USGS page on deriving phenology from NDVI time-series.

Description

This notebook demonstrates how to calculate vegetation phenology statistics using the DE Africa function xr_phenology. To detect changes in plant life for Sentinel-2, the script uses either the Normalized Difference Vegetation Index (NDVI) or the Enhanced Vegetation Index (EVI), which are common proxies for vegetation growth and health.

The outputs of this notebook can be used to assess spatio-temporal differences in the growing seasons of agricultural fields or native vegetation.

This notebook demonstrates the following steps:

Load cloud-masked Sentinel-2 data for an area of interest.

Calculate a vegetation proxy index (NDVI or EVI).

Generate a zonal time series of vegetation health.

Complete and smooth the vegetation timeseries to remove gaps and noise.

Calculate phenology statistics on a simple 1D vegetation time series.

Calculate per-pixel phenology statistics.

Optional: Calculate generic temporal statistics using the hdstats library.

Getting started

To run this analysis, run all the cells in the notebook, starting with the “Load packages” cell.

Load packages

Load key Python packages and supporting functions for the analysis.

[1]:

%matplotlib inline

# Force GeoPandas to use Shapely instead of PyGEOS

# In a future release, GeoPandas will switch to using Shapely by default.

import os

os.environ['USE_PYGEOS'] = '0'

import datacube

import numpy as np

import pandas as pd

import geopandas as gpd

import xarray as xr

import datetime as dt

import matplotlib.pyplot as plt

from deafrica_tools.temporal import xr_phenology, temporal_statistics

from deafrica_tools.datahandling import load_ard

from deafrica_tools.bandindices import calculate_indices

from deafrica_tools.plotting import display_map, rgb

from datacube.utils.geometry import Geometry

from deafrica_tools.spatial import xr_rasterize

from deafrica_tools.areaofinterest import define_area

from datacube.utils.aws import configure_s3_access

configure_s3_access(aws_unsigned=True, cloud_defaults=True)

Connect to the datacube

Connect to the datacube so we can access DE Africa data. The app parameter is a unique name for the analysis which is based on the notebook file name.

[2]:

dc = datacube.Datacube(app='Vegetation_phenology')

Analysis parameters

The following cell sets important parameters for the analysis:

veg_proxy: Band index to use as a proxy for vegetation health, e.g. 'NDVI' or 'EVI'.

lat: The central latitude to analyse (e.g. -10.6996).

lon: The central longitude to analyse (e.g. 35.2708).

buffer: The number of degrees to load around the central latitude and longitude. For reasonable loading times, set this to 0.1 or lower.

time_range: The date range to analyse (e.g. ('2019-01', '2019-06')).

Select location

To define the area of interest, there are two methods available:

By specifying the latitude, longitude, and buffer. This method requires you to input the central latitude, central longitude, and the buffer value in degrees around the center point you want to analyse. For example, lat = 10.338, lon = -1.055, and buffer = 0.1 will select an area extending 0.1 degrees in each direction around the point with coordinates (10.338, -1.055).

By uploading a polygon as a GeoJSON or Esri Shapefile. If you choose this option, you will need to upload the GeoJSON or Esri shapefile into the Sandbox using the Upload Files button in the top left corner of the Jupyter Notebook interface. Esri shapefiles must be uploaded with all the related files (.cpg, .dbf, .shp, .shx). Once uploaded, you can use the shapefile or GeoJSON to define the area of interest. Remember to update the code to call the file you have uploaded.

To use one of these methods, you can uncomment the relevant line of code and comment out the other one. To comment out a line, add the "#" symbol before the code you want to comment out. By default, the first option which defines the location using latitude, longitude, and buffer is being used.
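To get a feel for how large an area a given buffer selects, here is a rough pure-Python sketch. It assumes the buffer is applied as plus or minus buffer degrees on each side of the centre point (a square box), and it uses the spherical approximation of about 111 km per degree of latitude, with degrees of longitude shrinking by the cosine of the latitude. The function names bbox_from_point and approx_extent_km are hypothetical; define_area itself returns a GeoJSON-like feature collection, as used in the next cells.

```python
import math

KM_PER_DEGREE_LAT = 111.0  # rough spherical approximation, not a geodesic value


def bbox_from_point(lat, lon, buffer):
    """Latitude and longitude ranges of a square box of +/- buffer degrees (assumed)."""
    return (lat - buffer, lat + buffer), (lon - buffer, lon + buffer)


def approx_extent_km(lat, buffer):
    """Approximate north-south and east-west extent of that box in kilometres."""
    ns_km = 2 * buffer * KM_PER_DEGREE_LAT
    # A degree of longitude gets shorter away from the equator
    ew_km = ns_km * math.cos(math.radians(lat))
    return ns_km, ew_km


# The default example location used in this notebook
example_lat_range, example_lon_range = bbox_from_point(8.7186, 40.8646, 0.02)
ns_km, ew_km = approx_extent_km(8.7186, 0.02)  # roughly 4.4 km by 4.4 km
```

Because the extent scales linearly with the buffer, a buffer of 0.1 covers an area about 25 times larger than the 0.02 default, which is why small buffers keep loading times reasonable.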

[3]:

# Set the vegetation proxy to use

veg_proxy = 'NDVI'

time_range = ('2019-01-01', '2020-12-20')

[4]:

# Method 1: Specify the latitude, longitude, and buffer

aoi = define_area(lat=8.7186, lon=40.8646, buffer=0.02)

# Method 2: Use a polygon as a GeoJSON or Esri Shapefile.

# aoi = define_area(vector_path='aoi.shp')

#Create a geopolygon and geodataframe of the area of interest

geopolygon = Geometry(aoi["features"][0]["geometry"], crs="epsg:4326")

geopolygon_gdf = gpd.GeoDataFrame(geometry=[geopolygon], crs=geopolygon.crs)

# Get the latitude and longitude range of the geopolygon

lat_range = (geopolygon_gdf.total_bounds[1], geopolygon_gdf.total_bounds[3])

lon_range = (geopolygon_gdf.total_bounds[0], geopolygon_gdf.total_bounds[2])

View the selected location

The next cell will display the selected area on an interactive map. Feel free to zoom in and out to get a better understanding of the area you’ll be analysing. Clicking on any point of the map will reveal the latitude and longitude coordinates of that point.

[5]:

display_map(x=lon_range, y=lat_range)


Load cloud-masked Sentinel-2 data

The first step is to load Sentinel-2 data for the specified area of interest and time range. The load_ard function is used here to load data that has been masked for cloud, shadow and quality filters, making it ready for analysis.

[6]:

# Create a reusable query

query = {

'y': lat_range,

'x': lon_range,

'time': time_range,

'measurements': ['red', 'green', 'blue', 'nir'],

'resolution': (-20, 20),

'output_crs': 'epsg:6933',

'group_by':'solar_day'

}

# Load available data from Sentinel-2

ds = load_ard(

dc=dc,

products=['s2_l2a'],

mask_filters=[('opening', 3),('dilation', 3)],

**query,

)

print(ds)

Using pixel quality parameters for Sentinel 2

Finding datasets

s2_l2a

Applying morphological filters to pq mask [('opening', 3), ('dilation', 3)]

Applying pixel quality/cloud mask

Loading 137 time steps

/usr/local/lib/python3.10/dist-packages/rasterio/warp.py:344: NotGeoreferencedWarning: Dataset has no geotransform, gcps, or rpcs. The identity matrix will be returned.

_reproject(

<xarray.Dataset>

Dimensions: (time: 137, y: 253, x: 194)

Coordinates:

* time (time) datetime64[ns] 2019-01-01T07:47:31 ... 2020-12-16T07:...

* y (y) float64 1.111e+06 1.111e+06 ... 1.106e+06 1.106e+06

* x (x) float64 3.941e+06 3.941e+06 ... 3.945e+06 3.945e+06

spatial_ref int32 6933

Data variables:

red (time, y, x) float32 1.262e+03 1.076e+03 ... 1.007e+03 897.0

green (time, y, x) float32 933.0 833.0 737.0 ... 888.0 788.0 724.0

blue (time, y, x) float32 620.0 543.0 403.0 ... 541.0 481.0 437.0

nir (time, y, x) float32 2.596e+03 2.586e+03 ... 2.232e+03

Attributes:

crs: epsg:6933

grid_mapping: spatial_ref

Clip the datasets to the shape of the area of interest

The data were loaded using the bounding box of the area of interest (lat_range and lon_range come from the polygon's total_bounds), so the loaded dataset covers a rectangle that can include pixels lying outside the polygon itself.

Clipping the data to the exact shape of the area of interest ensures that only pixels inside the study area are analysed. To do this, the polygon is rasterised onto the same pixel grid as the dataset, and every pixel outside it is set to NaN.
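Conceptually, rasterising the polygon means deciding, for each pixel of the dataset's grid, whether that pixel falls inside the polygon. The sketch below uses an even-odd ray-casting test on pixel centres; it is a simplification with hypothetical function names, and xr_rasterize may treat pixels that the boundary only partly covers differently.

```python
def point_in_polygon(x, y, poly):
    """Even-odd ray casting: True if (x, y) lies inside the polygon given as [(x, y), ...]."""
    inside = False
    n = len(poly)
    for k in range(n):
        x1, y1 = poly[k]
        x2, y2 = poly[(k + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge crosses that line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize_mask(xs, ys, poly):
    """1 where the pixel centre (x, y) is inside the polygon, else 0 (rows follow ys)."""
    return [[1 if point_in_polygon(x, y, poly) else 0 for x in xs] for y in ys]


# A hypothetical square area of interest on a 4 x 4 grid of pixel centres
square = [(1, 1), (3, 1), (3, 3), (1, 3)]
centres = [0.5, 1.5, 2.5, 3.5]
aoi_mask = rasterize_mask(centres, centres, square)
# Only the four central pixels are inside the square
```

Masking with ds.where(aoi_raster == 1) then keeps the values wherever this kind of mask is 1 and sets the rest to NaN.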

[7]:

#Rasterise the area of interest polygon

aoi_raster = xr_rasterize(gdf=geopolygon_gdf, da=ds, crs=ds.crs)

#Mask the dataset to the rasterised area of interest

ds = ds.where(aoi_raster == 1)

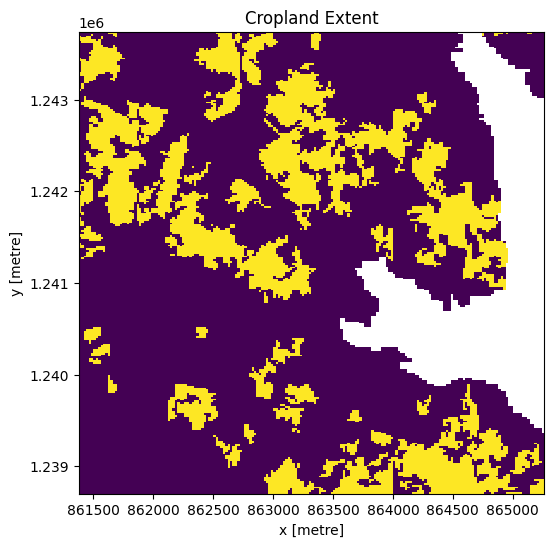

Mask region with DE Africa’s cropland extent map

Load the cropland mask over the region of interest.

[8]:

cm = dc.load(product='crop_mask',

time=('2019'),

measurements='filtered',

resampling='nearest',

like=ds.geobox).filtered.squeeze()

cm.where(cm<255).plot.imshow(add_colorbar=False, figsize=(6,6)) # we filter to <255 to omit missing data

plt.title('Cropland Extent');

Now we use the cropland map to mask the Sentinel-2 data so that only cropland pixels are retained.
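The effect of ds.where(cm == 1) can be sketched in plain Python on a tiny grid: any pixel whose crop-mask value is not exactly 1, whether 0 (non-crop) or 255 (no data), becomes NaN, while cropland pixels keep their values. The function name mask_to_cropland is hypothetical; xarray performs the same element-wise selection on the whole (time, y, x) array at once, broadcasting the 2D mask across every time step.

```python
import math


def mask_to_cropland(values, crop_mask):
    """Keep values where crop_mask == 1; set non-crop (0) and no-data (255) pixels to NaN."""
    return [
        [v if m == 1 else math.nan for v, m in zip(value_row, mask_row)]
        for value_row, mask_row in zip(values, crop_mask)
    ]


# Hypothetical NDVI values and crop-mask classes for a 2 x 3 grid
ndvi_grid = [[0.55, 0.61, 0.20],
             [0.48, 0.70, 0.33]]
crop_mask = [[1, 1, 0],
             [255, 1, 0]]

masked = mask_to_cropland(ndvi_grid, crop_mask)
# masked == [[0.55, 0.61, nan], [nan, 0.70, nan]]
```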

[9]:

#Filter out the no-data pixels (255) and non-crop pixels (0) from the cropland map and

#mask the Sentinel-2 data.

ds = ds.where(cm == 1)

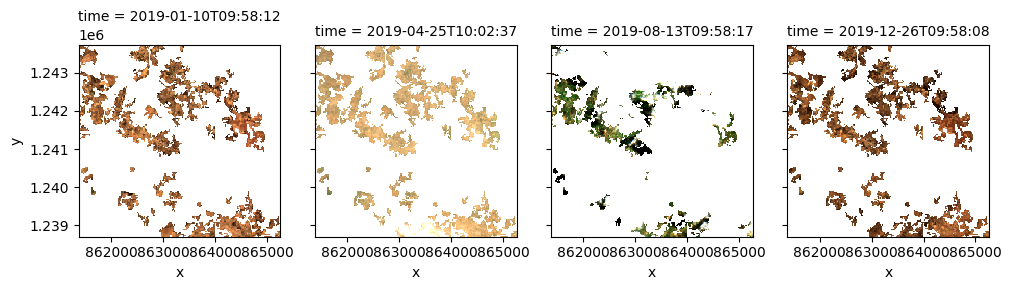

We can now plot some of the time steps as true-colour images using the rgb function.

[10]:

rgb(ds, index=[1,22,44,70], col_wrap=4, size=3)

Compute band indices

This study measures the presence of vegetation through either the normalised difference vegetation index (NDVI) or the enhanced vegetation index (EVI). The index that will be used is dictated by the veg_proxy parameter that was set in the “Analysis parameters” section.

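The normalised difference vegetation index (NDVI) requires the red and nir (near-infrared) bands. The formula is

$$\text{NDVI} = \frac{\text{NIR} - \text{Red}}{\text{NIR} + \text{Red}}$$

The enhanced vegetation index (EVI) requires the red, nir and blue bands. The formula is

$$\text{EVI} = 2.5 \times \frac{\text{NIR} - \text{Red}}{\text{NIR} + 6 \times \text{Red} - 7.5 \times \text{Blue} + 1}$$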

Both indices are available through the calculate_indices function, imported from deafrica_tools.bandindices. Here, we use satellite_mission='s2' since we’re working with Sentinel-2 data.
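A minimal pure-Python sketch of the two formulas for a single pixel, with hypothetical function names ndvi and evi. It assumes reflectance values already scaled to the 0 to 1 range: the EVI constants (in particular the +1 term) only make sense at that scale. The raw values printed when loading the data (e.g. red ≈ 1262) appear to be scaled by 10,000, so they would need dividing by 10,000 before being used with this sketch.

```python
def ndvi(nir, red):
    """Normalised difference vegetation index for one pixel."""
    return (nir - red) / (nir + red)


def evi(nir, red, blue):
    """Enhanced vegetation index for one pixel (reflectance assumed on a 0-1 scale)."""
    return 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1)


# Example: a hypothetical vegetated pixel with reflectances on a 0-1 scale
print(round(ndvi(0.4, 0.1), 3))       # 0.6
print(round(evi(0.4, 0.1, 0.05), 3))  # 0.462
```

NDVI is a simple normalised ratio, whereas EVI adds blue-band and constant terms intended to reduce atmospheric and soil-background effects, which is why EVI needs the extra blue band.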

[11]:

# Calculate the chosen vegetation proxy index and add it to the loaded data set

ds = calculate_indices(ds, index=veg_proxy, satellite_mission='s2')

The vegetation proxy index should now appear as a data variable, along with the loaded measurements, in the ds object.

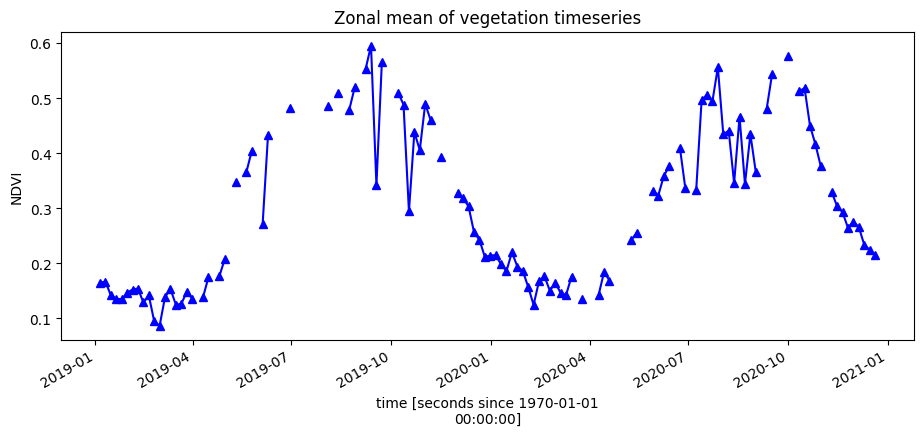

Plot the vegetation index over time

To get an idea of how the vegetation health changes throughout the year(s), we can plot a zonal time series over the region of interest. First we will do a simple plot of the zonal mean of the data.

[12]:

ds[veg_proxy].mean(['x', 'y']).plot.line('b-^', figsize=(11,4))

plt.title('Zonal mean of vegetation timeseries');

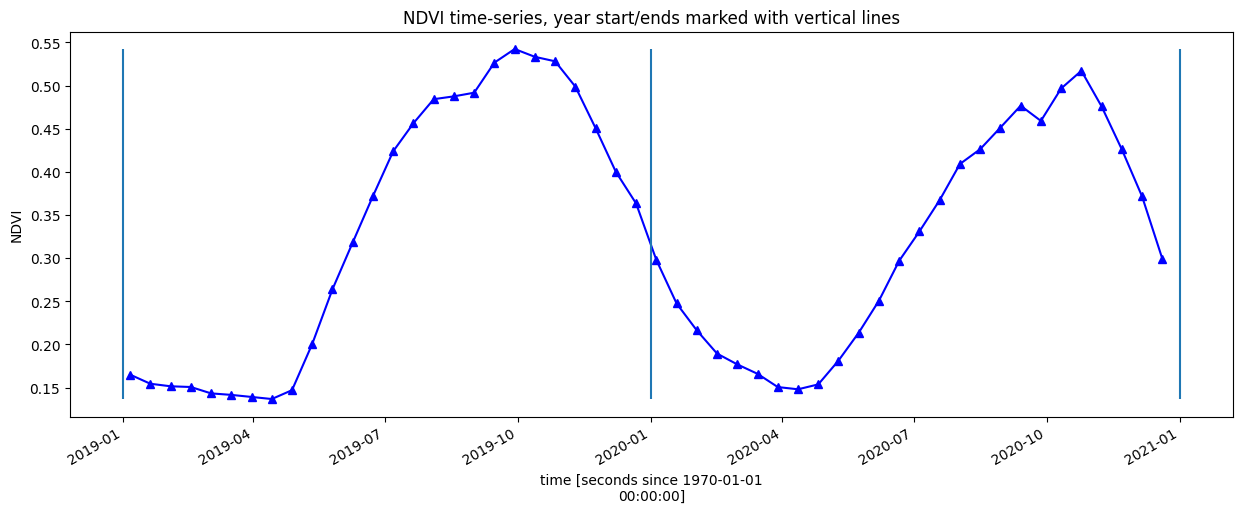

Smoothing/Interpolating vegetation time-series

Here, we smooth and interpolate the data to ensure we are working with a consistent time series. This is a very important step in the workflow, and there are many ways to smooth, interpolate, gap-fill, remove outliers from, or curve-fit the data to obtain a usable time series. If you are not using the default example, you may need to add methods beyond those used here.

To do this we take two steps:

Resample the data to fortnightly time-steps using the fortnightly median

Calculate a rolling mean with a window of 4 steps
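To make the two steps concrete, here is a minimal pure-Python sketch on a short list of (day, value) observations. The helper names bin_median and rolling_mean are hypothetical. One simplification to note: the bins here start at day 0 and are 14 days wide, whereas xarray's resample(time='2W') uses pandas' weekly anchoring, so the bin edges will differ. The rolling mean is meant to mirror rolling(time=window, min_periods=1).mean(): a trailing window that ignores NaNs and returns a value as soon as at least one valid observation is available.

```python
import math
from statistics import median


def bin_median(days, values, width=14):
    """Median of the valid (non-NaN) values in consecutive `width`-day bins.

    Bins start at day 0; an empty bin yields NaN, like an empty resample group.
    """
    n_bins = max(days) // width + 1
    bins = [[] for _ in range(n_bins)]
    for day, value in zip(days, values):
        if not math.isnan(value):
            bins[day // width].append(value)
    return [median(b) if b else math.nan for b in bins]


def rolling_mean(values, window=4, min_periods=1):
    """Trailing rolling mean over `window` steps, skipping NaNs."""
    out = []
    for i in range(len(values)):
        # The window covers the current step and up to window - 1 previous steps
        valid = [v for v in values[max(0, i - window + 1): i + 1] if not math.isnan(v)]
        if valid and len(valid) >= min_periods:
            out.append(sum(valid) / len(valid))
        else:
            out.append(math.nan)
    return out


# Hypothetical cloud-gappy observations: day of the series and index value (NaN = cloud)
days = [0, 5, 10, 15, 20, 40]
obs = [0.2, 0.4, math.nan, 0.3, 0.5, 0.6]

fortnightly = bin_median(days, obs)    # roughly [0.3, 0.4, 0.6]
smoothed = rolling_mean(fortnightly)
```

Taking the median before the mean is a deliberate order: the median suppresses single bad observations (e.g. residual cloud) within a fortnight, and the rolling mean then smooths the remaining fortnight-to-fortnight noise while filling short gaps.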

[13]:

resample_period='2W'

window=4

veg_smooth=ds[veg_proxy].resample(time=resample_period).median().rolling(time=window, min_periods=1).mean()

Next, calculate the zonal mean of the smoothed time series and plot it, marking the start and end of each year with vertical lines.

[14]:

veg_smooth_1D = veg_smooth.mean(['x', 'y'])

veg_smooth_1D.plot.line('b-^', figsize=(15,5))

_max=veg_smooth_1D.max()

_min=veg_smooth_1D.min()

plt.vlines(np.datetime64('2019-01-01'), ymin=_min, ymax=_max)

plt.vlines(np.datetime64('2020-01-01'), ymin=_min, ymax=_max)

plt.vlines(np.datetime64('2021-01-01'), ymin=_min, ymax=_max)

plt.title(veg_proxy+' time-series, year start/ends marked with vertical lines')

plt.ylabel(veg_proxy);

Calculate phenology statistics using xr_phenology

The DE Africa function xr_phenology can calculate a number of land-surface phenology statistics that together describe the characteristics of a plant’s lifecycle. The function can calculate the following statistics on either a zonal timeseries (like the one above), or on a per-pixel basis:

SOS = DOY of start of season

POS = DOY of peak of season

EOS = DOY of end of season

vSOS = Value at start of season

vPOS = Value at peak of season

vEOS = Value at end of season

Trough = Minimum value of season

LOS = Length of season (days)

AOS = Amplitude of season (in value units)

ROG = Rate of greening

ROS = Rate of senescence

where DOY = day-of-year (Jan 1st = 1, Dec 31st = 365, or 366 in a leap year). By default, the function returns all the statistics as an xarray.Dataset; to return only a subset of these statistics, pass a list of the desired statistics to the function, e.g. stats=['SOS', 'EOS', 'ROG'].

The xr_phenology function also allows for interpolating and/or smoothing the time series in the same way as we did above; the interpolation/smoothing occurs before the statistics are calculated.

See the deafrica_tools.temporal script for more information on each of the parameters in xr_phenology.
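The derived statistics can be related to the timing and value statistics with simple arithmetic. The sketch below is an assumption inferred from the definitions above rather than a copy of xr_phenology's code, but it reproduces the zonal results printed later in this notebook (for 2019: SOS 104, POS 314, EOS 356, vSOS 0.193, vPOS 0.602, vEOS 0.517 and Trough 0.193 give LOS 252, AOS 0.409, ROG ≈ 0.002 and ROS ≈ -0.002). The function name derived_phenology is hypothetical.

```python
def derived_phenology(sos, pos, eos, vsos, vpos, veos, trough):
    """Derive LOS, AOS, ROG and ROS from timing (DOY) and value statistics.

    Hypothetical helper: the formulas are inferred, not taken from xr_phenology.
    """
    return {
        'LOS': eos - sos,                    # days from start to end of season
        'AOS': vpos - trough,                # seasonal amplitude in index units
        'ROG': (vpos - vsos) / (pos - sos),  # index units per day while greening
        'ROS': (veos - vpos) / (eos - pos),  # index units per day while senescing (negative)
    }


# 2019 zonal values printed by this notebook
stats_2019 = derived_phenology(sos=104, pos=314, eos=356,
                               vsos=0.193, vpos=0.602, veos=0.517, trough=0.193)
print({k: round(v, 3) for k, v in stats_2019.items()})
```

Framed this way, ROG and ROS are average slopes of the curve between the season markers, so a steep, short green-up gives a large ROG even if the amplitude is modest.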

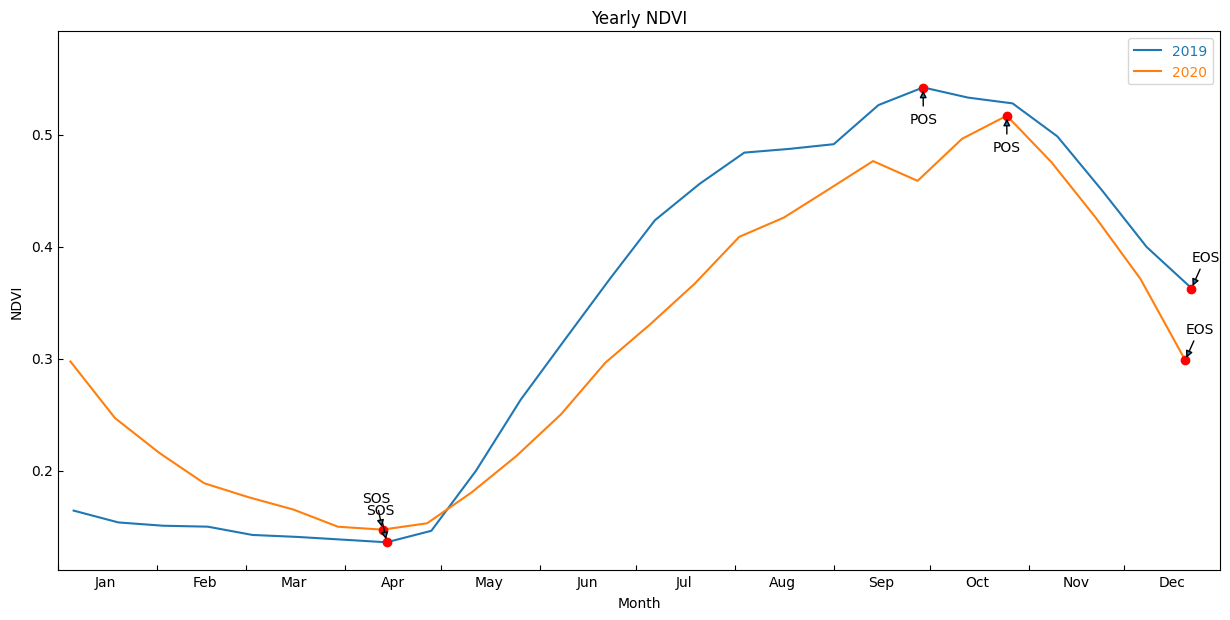

Zonal phenology statistics

To help us understand what these statistics refer to, let's first pass the simpler zonal mean (the mean of all pixels in the image) time series to the function and plot the results on the same curves as above.

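First, provide the list of statistics to calculate (basic_pheno_stats, passed to the stats parameter of xr_phenology), along with the methods used to estimate the start and end of season: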

method_sos: If ‘first’, then vSOS is estimated as the first positive slope on the greening side of the curve. If ‘median’, then vSOS is estimated as the median value of the positive slopes on the greening side of the curve.

method_eos: If ‘last’, then vEOS is estimated as the last negative slope on the senescing side of the curve. If ‘median’, then vEOS is estimated as the median value of the negative slopes on the senescing side of the curve.
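To illustrate the difference between the two options, here is one possible reading of the descriptions above as a pure-Python sketch on a short 1D series. It is hypothetical and simplified (for instance, slopes are taken as forward differences between consecutive time steps, and the ‘median’ option picks the greening-side point whose value is closest to the median), so xr_phenology's actual implementation may select points differently. method_eos would work the same way on the senescing side, using negative slopes after the peak.

```python
from statistics import median


def estimate_sos(doy, values, method='first'):
    """Hypothetical sketch: (DOY, value) of the start of season on the greening side."""
    peak = values.index(max(values))  # index of the peak of season
    # Greening-side time steps whose forward difference (slope) is positive
    candidates = [i for i in range(peak) if values[i + 1] - values[i] > 0]
    if not candidates:
        return None
    if method == 'first':
        i = candidates[0]
    elif method == 'median':
        med = median([values[j] for j in candidates])
        # Choose the candidate whose value is closest to that median
        i = min(candidates, key=lambda j: abs(values[j] - med))
    else:
        raise ValueError("method must be 'first' or 'median'")
    return doy[i], values[i]


doy = [0, 14, 28, 42, 56, 70]
series = [0.3, 0.25, 0.3, 0.45, 0.6, 0.5]
print(estimate_sos(doy, series, 'first'))   # (14, 0.25)
print(estimate_sos(doy, series, 'median'))  # (28, 0.3)
```

With this reading, ‘first’ places the start of season at the very beginning of green-up, while ‘median’ shifts it later into the greening phase and is less sensitive to a single early uptick.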

[15]:

basic_pheno_stats = ['SOS','vSOS','POS','vPOS','EOS','vEOS','Trough','LOS','AOS','ROG','ROS']

method_sos = 'first'

method_eos = 'last'

Calculate the phenology statistics for each year of the zonal time series and print the results.

[16]:

# Group the zonal time series by year
years = veg_smooth_1D.groupby('time.year')

# Get a list of the years in the time series to help with looping
years_int = [y[0] for y in years]

# Store the results in a dict
pheno_results = {}

# Loop through the years and calculate phenology
for year in years_int:
    # Select the year
    da = dict(years)[year]

    # Calculate the statistics
    stats = xr_phenology(
        da,
        method_sos=method_sos,
        method_eos=method_eos,
        stats=basic_pheno_stats,
        verbose=False
    )

    # Add the results to the dict
    pheno_results[str(year)] = stats

for key, value in pheno_results.items():
    print('Year: ' + key)
    for b in value.data_vars:
        print(" " + b + ": ", round(float(value[b].values), 3))

Year: 2019

SOS: 104.0

vSOS: 0.193

POS: 314.0

vPOS: 0.602

EOS: 356.0

vEOS: 0.517

Trough: 0.193

LOS: 252.0

AOS: 0.409

ROG: 0.002

ROS: -0.002

Year: 2020

SOS: 117.0

vSOS: 0.249

POS: 285.0

vPOS: 0.614

EOS: 355.0

vEOS: 0.362

Trough: 0.231

LOS: 238.0

AOS: 0.383

ROG: 0.002

ROS: -0.004

Plot the results with our statistics annotated on the plot.

[17]:

# Group the zonal time series by year to assist with plotting
years = veg_smooth_1D.groupby('time.year')

fig, ax = plt.subplots()
fig.set_size_inches(15, 7)

for year, y in zip(years, years_int):
    # Grab all the values we need for plotting
    eos = pheno_results[str(y)].EOS.values
    sos = pheno_results[str(y)].SOS.values
    pos = pheno_results[str(y)].POS.values
    veos = pheno_results[str(y)].vEOS.values
    vsos = pheno_results[str(y)].vSOS.values
    vpos = pheno_results[str(y)].vPOS.values

    # Create the plot
    # The groupby and mean below don't modify the vegetation data; they only
    # convert the time coordinate to day-of-year so all years share the same x-axis
    year[1].groupby('time.dayofyear').mean().plot(ax=ax, label=year[0])

    # Add start of season
    ax.plot(sos, vsos, 'or')
    ax.annotate('SOS',
                xy=(sos, vsos),
                xytext=(-15, 20),
                textcoords='offset points',
                arrowprops=dict(arrowstyle='-|>'))

    # Add end of season
    ax.plot(eos, veos, 'or')
    ax.annotate('EOS',
                xy=(eos, veos),
                xytext=(0, 20),
                textcoords='offset points',
                arrowprops=dict(arrowstyle='-|>'))

    # Add peak of season
    ax.plot(pos, vpos, 'or')
    ax.annotate('POS',
                xy=(pos, vpos),
                xytext=(-10, -25),
                textcoords='offset points',
                arrowprops=dict(arrowstyle='-|>'))

ax.legend()
plt.ylim(_min - 0.025, _max.values + 0.05)

month_abbr = ['Jan', 'Feb', 'Mar', 'Apr', 'May', 'Jun', 'Jul', 'Aug', 'Sep', 'Oct', 'Nov', 'Dec', '']
new_m = []
for m in month_abbr:
    new_m.append(' %s' % m)  # Add spaces before the month name

plt.xticks(np.linspace(0, 365, 13), new_m, horizontalalignment='left')
plt.xlabel('Month')
plt.ylabel(veg_proxy)
plt.title('Yearly ' + veg_proxy);

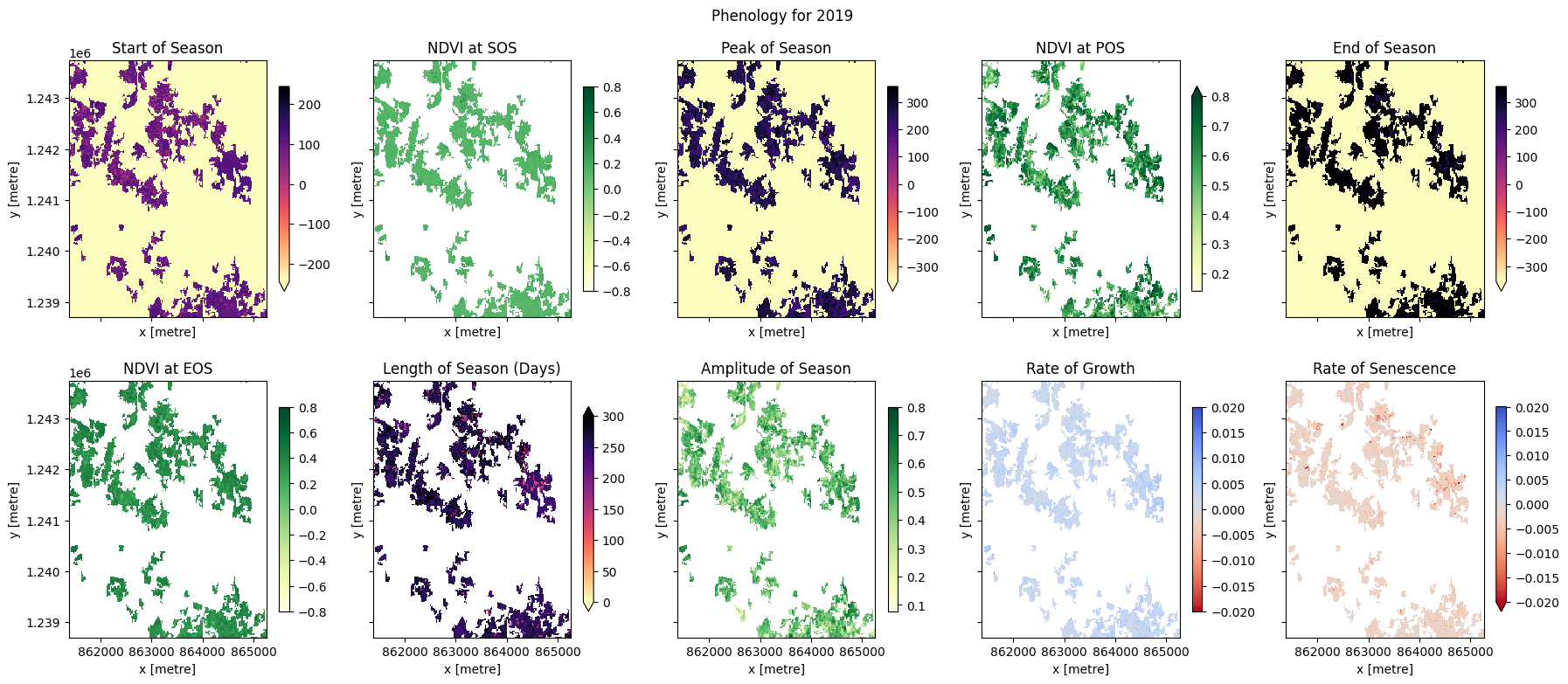

Per-pixel phenology statistics

We can now calculate the statistics for every pixel in our time-series and plot the results.

[18]:

# Group the smoothed per-pixel time series by year
years = veg_smooth.groupby('time.year')

# Get a list of the years in the time series to help with looping
years_int = [y[0] for y in years]

# Store the results in a dict
pheno_results = {}

# Loop through the years and calculate per-pixel phenology
for year in years_int:
    # Select the year
    da = dict(years)[year]

    # Calculate the statistics
    stats = xr_phenology(
        da,
        method_sos=method_sos,
        method_eos=method_eos,
        stats=basic_pheno_stats,
        verbose=False
    )

    # Add the results to the dict
    pheno_results[str(year)] = stats

The phenology statistics have been calculated separately for every pixel in the image. Let’s plot each of them to see the results.

Below, pick a year from the phenology results to plot.

[19]:

#Pick a year to plot

year_to_plot = '2019'

At the top of the plotting code, we re-mask the phenology results with the crop mask. This is necessary because xr_phenology has methods for handling pixels that contain only NaNs (such as those outside the crop mask), so it can return phenology values for regions outside the mask.
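Why can pixels outside the mask receive values? A simplified, hypothetical sketch: if a per-pixel peak-of-season search falls back to the first time step when a pixel has no valid observations, that pixel still gets a DOY even though it has no data. Re-masking with the crop mask removes such values. The fallback rule and the function names pos_per_pixel and remask are assumptions for illustration, not xr_phenology's documented behaviour.

```python
import math


def pos_per_pixel(doy, cube):
    """DOY of the maximum value for each pixel of cube[t][y][x].

    Hypothetical fallback: an all-NaN pixel is assigned the first time step.
    """
    ny, nx = len(cube[0]), len(cube[0][0])
    result = [[None] * nx for _ in range(ny)]
    for y in range(ny):
        for x in range(nx):
            series = [cube[t][y][x] for t in range(len(doy))]
            valid_t = [t for t, v in enumerate(series) if not math.isnan(v)]
            t_max = max(valid_t, key=lambda t: series[t]) if valid_t else 0
            result[y][x] = doy[t_max]
    return result


def remask(stat, crop_mask):
    """Set statistics to NaN wherever the crop mask is not 1."""
    return [[s if m == 1 else math.nan for s, m in zip(s_row, m_row)]
            for s_row, m_row in zip(stat, crop_mask)]


nan = math.nan
doy = [10, 24, 38]
# One row, two pixels: a cropland pixel and an all-NaN (masked-out) pixel
cube = [[[0.2, nan]], [[0.6, nan]], [[0.4, nan]]]

pos = pos_per_pixel(doy, cube)      # [[24, 10]]: the empty pixel still gets a DOY
pos_masked = remask(pos, [[1, 0]])  # [[24, nan]]
```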

[20]:

#select the year to plot

phen = pheno_results[year_to_plot]

#mask again with crop-mask

phen = phen.where(cm == 1)

# set up figure

fig, ax = plt.subplots(nrows=2,

ncols=5,

figsize=(18, 8),

sharex=True,

sharey=True)

# set colorbar size

cbar_size = 0.7

# set aspect ratios

for a in fig.axes:
    a.set_aspect('equal')

# start of season

phen.SOS.plot(ax=ax[0, 0],

cmap='magma_r',

vmax=300,

vmin=0,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[0, 0].set_title('Start of Season (DOY)')

phen.vSOS.plot(ax=ax[0, 1],

cmap='YlGn',

vmax=0.8,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[0, 1].set_title(veg_proxy+' at SOS')

# peak of season

phen.POS.plot(ax=ax[0, 2],

cmap='magma_r',

vmax=300,

vmin=0,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[0, 2].set_title('Peak of Season (DOY)')

phen.vPOS.plot(ax=ax[0, 3],

cmap='YlGn',

vmax=0.8,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[0, 3].set_title(veg_proxy+' at POS')

# end of season

phen.EOS.plot(ax=ax[0, 4],

cmap='magma_r',

vmax=300,

vmin=0,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[0, 4].set_title('End of Season (DOY)')

phen.vEOS.plot(ax=ax[1, 0],

cmap='YlGn',

vmax=0.8,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[1, 0].set_title(veg_proxy+' at EOS')

# Length of Season

phen.LOS.plot(ax=ax[1, 1],

cmap='magma_r',

vmax=300,

vmin=0,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[1, 1].set_title('Length of Season (days)')

# Amplitude

phen.AOS.plot(ax=ax[1, 2],

cmap='YlGn',

vmax=0.8,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[1, 2].set_title('Amplitude of Season')

# rate of growth

phen.ROG.plot(ax=ax[1, 3],

cmap='coolwarm_r',

vmin=-0.02,

vmax=0.02,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[1, 3].set_title('Rate of Growth')

# rate of senescence

phen.ROS.plot(ax=ax[1, 4],

cmap='coolwarm_r',

vmin=-0.02,

vmax=0.02,

cbar_kwargs=dict(shrink=cbar_size, label=None))

ax[1, 4].set_title('Rate of Senescence')

plt.suptitle('Phenology for '+year_to_plot)

plt.tight_layout();

Conclusions

In the example above, we can see these four fields are following the same cropping schedule and are therefore likely the same species of crop. We can also observe intra-field differences in the rates of growth, and in the NDVI values at different times of the season, which may be attributable to differences in soil quality, watering intensity, or other farming practices.

Phenology statistics are a powerful way to summarise the seasonal cycle of a plant’s life. Per-pixel plots of phenology can help us understand the timing of vegetation growth and senescence across large areas and across diverse plant species, as every pixel is treated as an independent series of observations. This could be important, for example, if we wanted to assess how growing seasons are shifting as the climate warms.

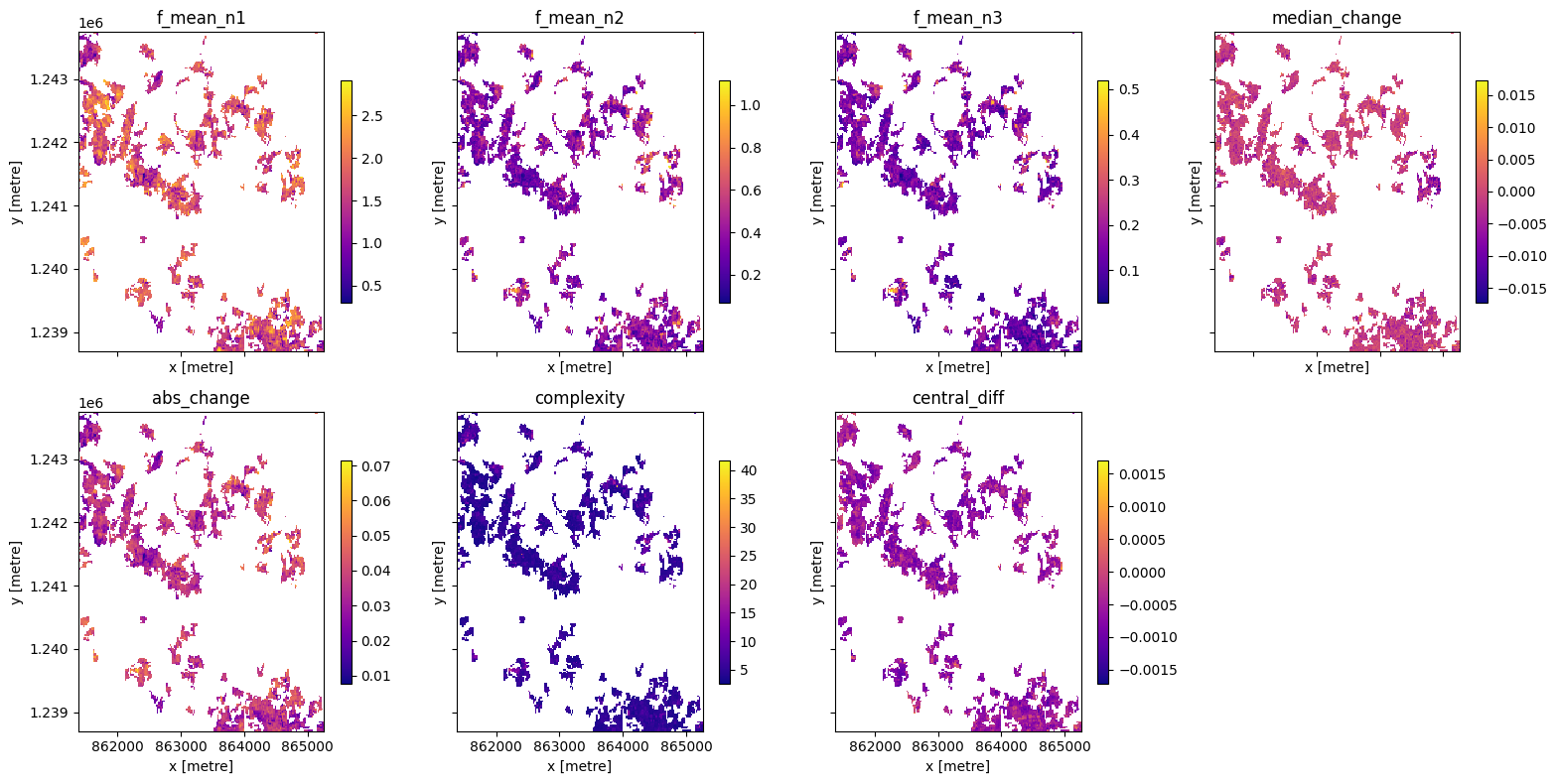

Advanced: Calculating generic temporal statistics

In addition to the xr_phenology function, the DE Africa deafrica_tools.temporal script contains another function for calculating generic time-series statistics, temporal_statistics. This function is built upon the hdstats library (a library of multivariate and high-dimensional statistics algorithms). It accepts a 2D or 3D time series of, for example, NDVI, and computes a number of summary statistics, including:

- discordance

- discrete Fourier transform coefficients (mean, std, and median)

- median change

- absolute change

- complexity

- central difference

- number of peaks (very slow to run)
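For intuition about three of the simpler statistics, here is a pure-Python sketch of common definitions based on the differences between consecutive time steps. These definitions (median change as the median of successive differences, absolute change as the mean absolute difference, and complexity as the square root of the sum of squared differences) are assumptions for illustration; hdstats may define or normalise them differently. The function names are hypothetical.

```python
import math
from statistics import median


def diffs(series):
    """Differences between consecutive time steps."""
    return [b - a for a, b in zip(series, series[1:])]


def median_change(series):
    """Typical step-to-step change; positive for a mostly rising series."""
    return median(diffs(series))


def mean_abs_change(series):
    """Average size of a step-to-step change, regardless of direction."""
    d = diffs(series)
    return sum(abs(v) for v in d) / len(d)


def complexity(series):
    """Rough measure of how 'wiggly' the curve is: the length of its successive changes."""
    return math.sqrt(sum(v * v for v in diffs(series)))


ts = [0.2, 0.5, 0.4, 0.8]  # hypothetical NDVI series for one pixel
print(median_change(ts), mean_abs_change(ts), complexity(ts))
```

A flat series scores zero on all three, which is one reason these statistics can help separate stable land cover from actively cropped pixels.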

Below we will calculate a number of these statistics and plot them.

[21]:

statistics = ['discordance',

'f_mean',

'median_change',

'abs_change',

'complexity',

'central_diff']

ts_stats = temporal_statistics(veg_smooth, statistics)

print(ts_stats)

Statistics:

discordance

f_mean

median_change

abs_change

complexity

central_diff

<xarray.Dataset>

Dimensions: (x: 194, y: 253)

Coordinates:

* x (x) float64 3.941e+06 3.941e+06 ... 3.945e+06 3.945e+06

* y (y) float64 1.111e+06 1.111e+06 ... 1.106e+06 1.106e+06

Data variables:

discordance (y, x) float32 nan nan nan nan nan ... nan nan nan nan nan

f_mean_n1 (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

f_mean_n2 (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

f_mean_n3 (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

median_change (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

abs_change (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

complexity (y, x) float32 nan nan nan nan nan ... nan nan nan nan nan

central_diff (y, x) float32 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0

[22]:

# Re-mask the statistics with the crop mask
ts_stats = ts_stats.where(cm == 1)

# Set up figure
fig, axes = plt.subplots(nrows=2,
                         ncols=4,
                         figsize=(16, 8),
                         sharex=True,
                         sharey=True)

# Set colorbar size
cbar_size = 0.7

# Set aspect ratios
for a in fig.axes:
    a.set_aspect('equal')

stats = list(ts_stats.data_vars)

# Plot: each row of axes takes one statistic from each consecutive pair in `stats`
for ax, s1, s2, s3, s4 in zip(axes, stats[0:2], stats[2:4], stats[4:6], stats[6:8]):
    ts_stats[s1].plot(ax=ax[0], cmap='cividis', cbar_kwargs=dict(shrink=cbar_size, label=None))
    ax[0].set_title(s1)
    ts_stats[s2].plot(ax=ax[1], cmap='plasma', cbar_kwargs=dict(shrink=cbar_size, label=None))
    ax[1].set_title(s2)
    ts_stats[s3].plot(ax=ax[2], cmap='magma', cbar_kwargs=dict(shrink=cbar_size, label=None))
    ax[2].set_title(s3)
    ts_stats[s4].plot(ax=ax[3], cmap='viridis', cbar_kwargs=dict(shrink=cbar_size, label=None))
    ax[3].set_title(s4)

plt.tight_layout();

Next steps

When you’re done, if you wish to run this code for another region, return to the “Analysis parameters” cell, modify some values (e.g. time_range, or lat/lon) and rerun the analysis.

For advanced users, xr_phenology could be used for generating phenology feature layers in a machine learning classifier (see Machine Learning with ODC for an example of running ML models with ODC data). xr_phenology can be called inside the function passed to the feature_func parameter of the deafrica_tools.classification.collect_training_data() function, allowing phenological statistics to be computed during the collection of training data. An example would look like this:

from deafrica_tools.temporal import xr_phenology
from deafrica_tools.classification import collect_training_data

def phenology_stats(da):
    stats = xr_phenology(da, complete='fast_complete')
    return stats

training = collect_training_data(...,
                                 feature_func=phenology_stats)

Additional information

License: The code in this notebook is licensed under the Apache License, Version 2.0. Digital Earth Africa data is licensed under the Creative Commons by Attribution 4.0 license.

Contact: If you need assistance, please post a question on the Open Data Cube Slack channel or on the GIS Stack Exchange using the open-data-cube tag (you can view previously asked questions here). If you would like to report an issue with this notebook, you can file one on GitHub.

Compatible datacube version:

[23]:

print(datacube.__version__)

1.8.15

Last Tested:

[24]:

from datetime import datetime

datetime.today().strftime('%Y-%m-%d')

[24]:

'2023-09-19'