Monitoring coastal erosion along Africa’s coastline¶

Contexte¶

Over 40% of the world’s population lives within 100 km of the coastline. However, coastal environments are constantly changing, with erosion and coastal change presenting a major challenge to valuable coastal infrastructure and important ecological habitats. Up-to-date data on coastal change and erosion is essential for coastal managers to be able to identify and minimise the impacts of coastal change and erosion.

Monitoring coastlines and rivers using field surveys can be challenging and hazardous, particularly at regional or national scale. Aerial photography and LiDAR can be used to monitor coastal change, but this is often expensive and requires many repeated flights over the same areas of coastline to build up an accurate history of how the coastline has changed across time.

Imagery from satellites such as the NASA/USGS Landsat, Copernicus Sentinel-1/2 constellations is available for free for the entire planet, making satellite imagery a powerful and cost-effective tool for monitoring coastlines and rivers at regional or national scale.

DE Africa use case¶

The usefulness of optical imagery such as Landsat and Sentinel-2 in the coastal zone can be affected by the presence of clouds, sun-glint over water, poor water quality (e.g. sediment), and the influence of tides. The effect of these factors can be reduced by combining individual noisy images into cleaner « summary » or composite layers, and filtering the data to focus only on images taken at certain tidal conditions (e.g. mid-tide). These clean, tidally-constrained composite images can then be used to identify and extract the precise boundary between water and land. This allows us to extract accurate shorelines that can be compared across time to reveal hotspots of erosion and coastal change.

Radar observations are largely unaffected by cloud cover. By developing a process to classify the observed pixels as either water or land, it is possible to identify the shoreline under all weather from the Sentinel-1 analysis ready backscatter data. Since radar measurement is sensitive to surface roughness, moisture content, and viewing geometry, the shoreline delineation can be affected by surface conditions such as breaking waves. Our temporal composite approach reduces noise caused by temporary conditions but shorelines mapped by radar may still be biased compared to shoreline identified in optical imagery. For example, smooth and flat beaches may be mistaken for water when they exhibit extremely low backscatter values.

Description¶

In this example, we use a simplified version of the DE Africa Coastlines method to combine time series data with image compositing and tide filtering techniques to accurately map shorelines across time, and identify changes. For a selected area of interest, data from Landsat, Sentinel-2 or Sentinel-1 are selected based on availability.

Following steps are demonstrated: 1. Query the satellite data and select best available product 2. Process the selected data and generate annual composite images for each year 3. Extract shorelines and calculate rates of coastal change

Getting started¶

To run this analysis, run all the cells in the notebook, starting with the « Load packages » cell.

After finishing the analysis, return to the « Analysis parameters » cell, modify some values (e.g. choose a different location or time period to analyse) and re-run the analysis. There are additional instructions on modifying the notebook at the end.

Load packages¶

First we need to install additional tools from the DE Africa Coastlines repository that will allow us to estimate rates of coastal change. > Note: If you run into any error messages in this analysis, try restarting the notebook by clicking Kernel, then Restart Kernel and Clear All Outputs.

[1]:

# pip install -q git+https://github.com/digitalearthafrica/deafrica-coastlines.git@S2_test --disable-pip-version-check

Now we can load key Python packages and supporting functions for the analysis.

[2]:

%matplotlib inline

import warnings

import datacube

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from skimage.filters import threshold_minimum

warnings.filterwarnings("ignore")

from coastlines.raster import load_tidal_subset, tide_cutoffs

from coastlines.vector import annual_movements, calculate_regressions, points_on_line

from deafrica_tools.bandindices import calculate_indices

from deafrica_tools.coastal import pixel_tides

from deafrica_tools.dask import create_local_dask_cluster

from deafrica_tools.datahandling import load_ard, load_best_available_ds, preprocess_s1

from deafrica_tools.plotting import display_map, rgb

from deafrica_tools.spatial import subpixel_contours

Set up a Dask cluster¶

Dask can be used to better manage memory use down and conduct the analysis in parallel. For an introduction to using Dask with Digital Earth Africa, see the Dask notebook.

Note: We recommend opening the Dask processing window to view the different computations that are being executed; to do this, see the Dask dashboard in DE Africa section of the Dask notebook.

To use Dask, set up the local computing cluster using the cell below.

[ ]:

client = create_local_dask_cluster(return_client=True)

Connect to the datacube¶

Activate the datacube database, which provides functionality for loading and displaying stored Earth observation data.

[4]:

dc = datacube.Datacube(app="Coastal_erosion_Landsat_Sentinel")

Analysis parameters¶

The following cell set important parameters for the analysis:

lat: The central latitude to analyse (e.g.14.283).lon: The central longitude to analyse (e.g.-16.921).buffer: The number of square degrees to load around the central latitude and longitude. For reasonable loading times, set this as0.1or lower.time_range: The date range to analyse (e.g.('2018', '2021'))time_step: This parameter allows us to choose the length of the time periods we want to compare: e.g. shorelines for each year, or shorelines for each six months etc.1Ywill generate one coastline for every year in the dataset;2Ywill produce a coastline for every two years, etc.filter_size: An integer number defining the size of the speckle filter window used for Sentinel-1. As we will use temporal composites, which will help remove speckle noise, we recommend setting this filter size as None to disable speckle filtering, or a very small value (e.g. 2) to avoid significant degradation of spatial resolution.s1_orbit_filtering: A boolean value defining whether to filter Sentinel-1 observations by satellite orbit. When this is set to True, a per-pixel filtering will be applied to keep only observations acquired in the orbit with higher frequency. This parameter is set to False by default, which means observations from both descending and ascending orbits will be returned.

If running the notebook for the first time, keep the default settings below. This will demonstrate how the analysis works and provide meaningful results. The example explores coastal change in Comoros.

To run the notebook for a different area, make sure at least one of Landsat, Sentinel-2 or Sentinel-1 data is available for the new location, which you can check at the DE Africa Explorer.

To ensure that the tidal modelling part of this analysis works correctly, please make sure that part of the study area is located over water when setting lat_range and lon_range.

[5]:

# Define the area of interest

lat = -12.27

lon = 43.726

# buffering distance around the central location defined by lat and lon

buffer = 0.04

# Combine central lat,lon with buffer to get area of interest

lat_range = (lat - buffer, lat + buffer)

lon_range = (lon - buffer, lon + buffer)

# Set the range of dates for the analysis, time step and tide range

time_range = ("2018", "2019")

time_step = "1Y"

# Speckle filtering size for Sentinel-1 data

filter_size = 2

# whether to apply orbit filtering

s1_orbit_filtering = True

View the selected location¶

The next cell will display the selected area on an interactive map. Feel free to zoom in and out to get a better understanding of the area you’ll be analysing. Clicking on any point of the map will reveal the latitude and longitude coordinates of that point.

[6]:

display_map(x=lon_range, y=lat_range)

[6]:

Query to find the best available product¶

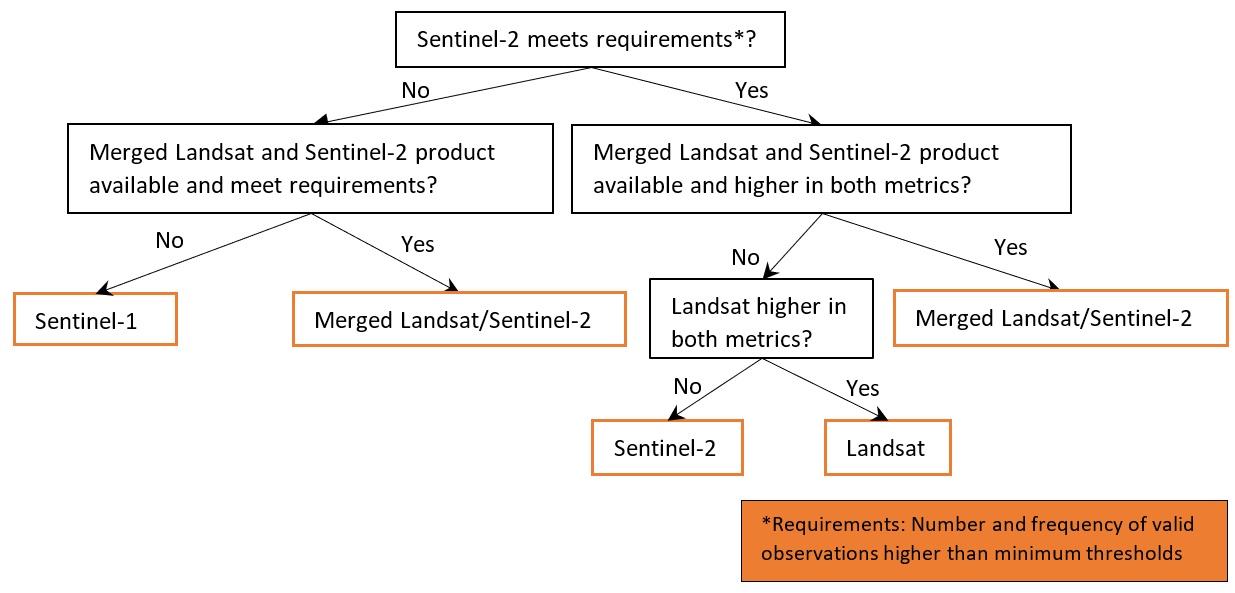

load_best_available_ds to select and load the data. The function implements a few steps: 1. Query all three products using the lat_range, lon_range and time_range specified above and the load_ard

function. The load_ard function will load and automatically mask out clouds from the dataset, allowing us to focus on pixels that contain useful data. For more information see the Using load_ard notebook.thresh_n_valid and thresh_freq.

dc, lat_range, lon_range, time_range and time_step as specified above. Other important optional parameters include: * combine_ls_s2: A boolean value deciding whether to include merged Landsat and Sentinel-2 products as one of the products to choose from. By default it is set to False so that only Landsat, Sentinel-2 or Sentinel-1 will be used. * coastal_masking: A boolean value deciding whether to calculate a simplified coastal zone mask

and restrict the comparison of the products within the masked area. Enabling this option would likely exclude pixels outside coastal zone (e.g. inland or deep ocean) that are not critical for coastline mapping, but require more time (e.g. several minutes) to process. By default this parameter is set to False to accelerate data query and loading. * set_resolution: A pre-set spatial resolution (e.g. 20) in metres to query all products. By default this parameter is not set, so the original

resolutions of the products will be used, i.e. 30 m for Landsat, 20 m for Sentinel-1 and 10 m for Sentinel-2.set_product: Set this to only query and load a pre-selected product, i.e. “ls” for Landsat,”s1” for Sentinel-1,”s2” for Sentinel-2, or “ls_s2” for merged/stacked Landsat and Sentinel-2 data. By setting this parameter no other products will be queried or compared.Note: Depending on parameter setting, the cell below may take more than 10 minutes to finish.

[7]:

%%time

ds_selected, product_name = load_best_available_ds(dc, lat_range, lon_range, time_range, time_step, set_product="s2")

No resolution pre-set, using default resolutions for individual products...

Pre-selected product: Sentinel-2

Using pixel quality parameters for Sentinel 2

Finding datasets

s2_l2a

Counting good quality pixels for each time step

/usr/local/lib/python3.10/dist-packages/rasterio/warp.py:344: NotGeoreferencedWarning: Dataset has no geotransform, gcps, or rpcs. The identity matrix will be returned.

_reproject(

Filtering to 251 out of 287 time steps with at least 20.0% good quality pixels

Applying morphological filters to pq mask [('opening', 2), ('dilation', 5)]

Applying pixel quality/cloud mask

Returning 251 time steps as a dask array

CPU times: user 34.1 s, sys: 2.73 s, total: 36.8 s

Wall time: 6min 16s

The function above will print out some useful information on parameters setting, progress of the processing and why the product is chosen based on the selection rules. Using the default parameters set in this notebook, you will find that Sentinel-2 is chosen as the best available product by the above function, because it has the higher mean number and frequency of valid observations compared to Landsat across the scene and within each time step. Moreover, Sentinel-2 and Landsat are preferred over Sentinel-1 due to the occasional challenges in distinguishing between land and water in SAR backscatter images. Smooth and flat beaches, in particular, can exhibit extremely low backscatter values, making differentiation from water pixels difficult.

If the date range is set to start before 2017, only Landsat data will be queried and loaded due to limited availability of Sentinel data.

Once the load is complete, you can examine the product name and data by printing it in the next cell. The Dimensions argument reveals the number of time steps in the data set, as well as the number of pixels in the x (longitude) and y (latitude) dimensions.

[8]:

print(product_name, "\n", ds_selected)

s2

<xarray.Dataset>

Dimensions: (time: 229, y: 890, x: 875)

Coordinates:

* time (time) datetime64[ns] 2018-01-22T07:22:04 ... 2019-12-23T07:...

* y (y) float64 -1.352e+06 -1.352e+06 ... -1.361e+06 -1.361e+06

* x (x) float64 3.571e+05 3.571e+05 ... 3.658e+05 3.658e+05

spatial_ref int32 32638

Data variables:

red (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

green (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

blue (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

swir_1 (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

Attributes:

crs: epsg:32638

grid_mapping: spatial_ref

Plot example timestamp¶

Here we can plot an example image at the selected location at a given timestamp. To visualise Landsat or Sentinel-2 data, use the pre-loaded rgb utility function to plot a true colour image for a given timestamp. White areas indicate where clouds or other invalid pixels in the image have been masked. If the selected product is Sentinel-1, we plot the vh band as an example.

[9]:

# Set the timesteps to visualise

timestamp = 4

if (product_name == "ls") or (product_name == "s2") or (product_name == "ls_s2"):

# Generate RGB plots at each timestep

rgb(ds_selected, index=timestamp, percentile_stretch=[0, 0.999])

else:

ds_selected["vh"].isel(time=timestamp).plot.imshow(robust=True)

Change the value for timestamp and re-run the cell to plot a different timestamp (timestamps are numbered from 0 to n_time - 1 where n_time is the total number of timestamps; see the time listing under the Dimensions category in the dataset print-out above).

Process selected data and generate annual composites¶

Model tide height¶

For each satellite timestep, we use the pixel_tides function to model tide heights into a low-resolution 5 x 5 km grid (matching resolution of the FES2014 tidal model), then reprojects modelled tides into the spatial extent of our satellite image. We add this new data as a new variable tide_m in our satellite dataset to allow each satellite pixel to be analysed and

filtered/masked based on the tide height at the exact moment of satellite image acquisition.

[10]:

ds_selected["tide_m"], tides_lowres = pixel_tides(ds_selected, resample=True)

Creating reduced resolution 5000 x 5000 metre tide modelling array

Modelling tides using FES2014 tide model

Reprojecting tides into original array

100%|██████████| 229/229 [00:04<00:00, 53.94it/s]

Based on the entire time-series of tide heights, we can compute the max and min satellite-observed tide height for each pixel, then calculate tide cutoffs used to restrict our data to satellite observations centred over mid-tide (0 m Above Mean Sea Level) using the tide_cutoffs function:

[11]:

# Determine tide cutoff

tide_cutoff_min, tide_cutoff_max = tide_cutoffs(ds_selected, tides_lowres, tide_centre=0.0)

With the tide cutoffs calculated for all pixels, we now only keep observations within the tide height cutoff ranges. We also want to drop time-steps with no pixels within the cutoff ranges:

[12]:

tide_bool = (ds_selected.tide_m >= tide_cutoff_min) & (ds_selected.tide_m <= tide_cutoff_max)

ds_selected = ds_selected.sel(time=tide_bool.sum(dim=["x", "y"]) > 0)

# Apply mask, and load in corresponding tide masked data

ds_selected = ds_selected.where(tide_bool)

print(ds_selected)

<xarray.Dataset>

Dimensions: (time: 119, y: 890, x: 875)

Coordinates:

* time (time) datetime64[ns] 2018-01-22T07:22:04 ... 2019-12-23T07:...

* y (y) float64 -1.352e+06 -1.352e+06 ... -1.361e+06 -1.361e+06

* x (x) float64 3.571e+05 3.571e+05 ... 3.658e+05 3.658e+05

spatial_ref int32 32638

Data variables:

red (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

green (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

blue (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

swir_1 (time, y, x) float32 dask.array<chunksize=(1, 890, 875), meta=np.ndarray>

tide_m (time, y, x) float32 -0.1365 -0.1364 ... -0.5846 -0.5846

Attributes:

crs: epsg:32638

grid_mapping: spatial_ref

Process selected data¶

To extract shoreline locations, we need to be able to separate water from land in our study area. To do this, for Landsat or Sentinel-2 we can calculate a water index called the Modified Normalised Difference Water Index, or MNDWI. This index uses the ratio of green and Shortwave-Infrared (SWIR) radiation to identify the presence of water (Xu 2006). The formula is:

where Green is the green band and SWIR is the SWIR band.

For Sentinel-1 data, we have implemented a few optional pre-processing steps. As radar observations appear speckly due to random interference of coherent signals from target scatters, we implemented speckle filtering using Lee filter, which is one of the popular adaptive speckle filters that takes into account local homogeneity.

Besides, each of the Sentinel-1 observations were acquired from either a descending or ascending orbit. The orbital direction impacts on the local incidence angle and backscatter value. Therefore, we implemented a per-pixel filtering to keep only observations from the dominant orbit, which is expected to minimise the effects of inconsistent looking angle and obit direction for each individual pixel. In addition, it is often useful to convert the backscatter to decible (dB) for analysis. Backscatter in dB unit has a more symmetric noise profile and less skewed value distribution for easier statistical evaluation.

These preprocessing steps are applied using function preprocess_s1 and the parameter filter_size and s1_orbit_filtering as specified at the begining of the notebook. Note that Sentinel-1 preprocessing may take a few minutes to finish.

[13]:

if product_name == "ls":

# Calculate the water index

ds_selected = calculate_indices(ds_selected, index="MNDWI", satellite_mission="ls")

elif (product_name == "s2") or (product_name == "ls_s2"):

# Calculate the water index

ds_selected = calculate_indices(ds_selected, index="MNDWI", satellite_mission="s2")

else:

ds_selected = preprocess_s1(ds_selected, filter_size=filter_size, s1_orbit_filtering=s1_orbit_filtering)

Combine observations into noise-free summary images¶

Individual remote sensing images can be affected by noisy data, e.g. cloud/cloud shadow for optical images and effects of wind on the water for radar images. To produce cleaner images that can be compared more easily across time, we can create “summary” images or composites that combine multiple images into one image to reveal the median or “typical” appearance of the landscape for a certain time period. In this case, we use the median as the summary statistic because it prevents strong outliers from skewing the data, which would not be the case if we were to use the mean.

In the code below, we take the time series of images and combine them into single images for each time_step. For example, if time_step = '1Y', the code will produce one new image for each year in the dataset. This step can take several minutes to load if the study area is large.

Note: We recommend opening the Dask processing window to view the different computations that are being executed; to do this, see the Dask dashboard in DE Africa section of the Dask notebook.

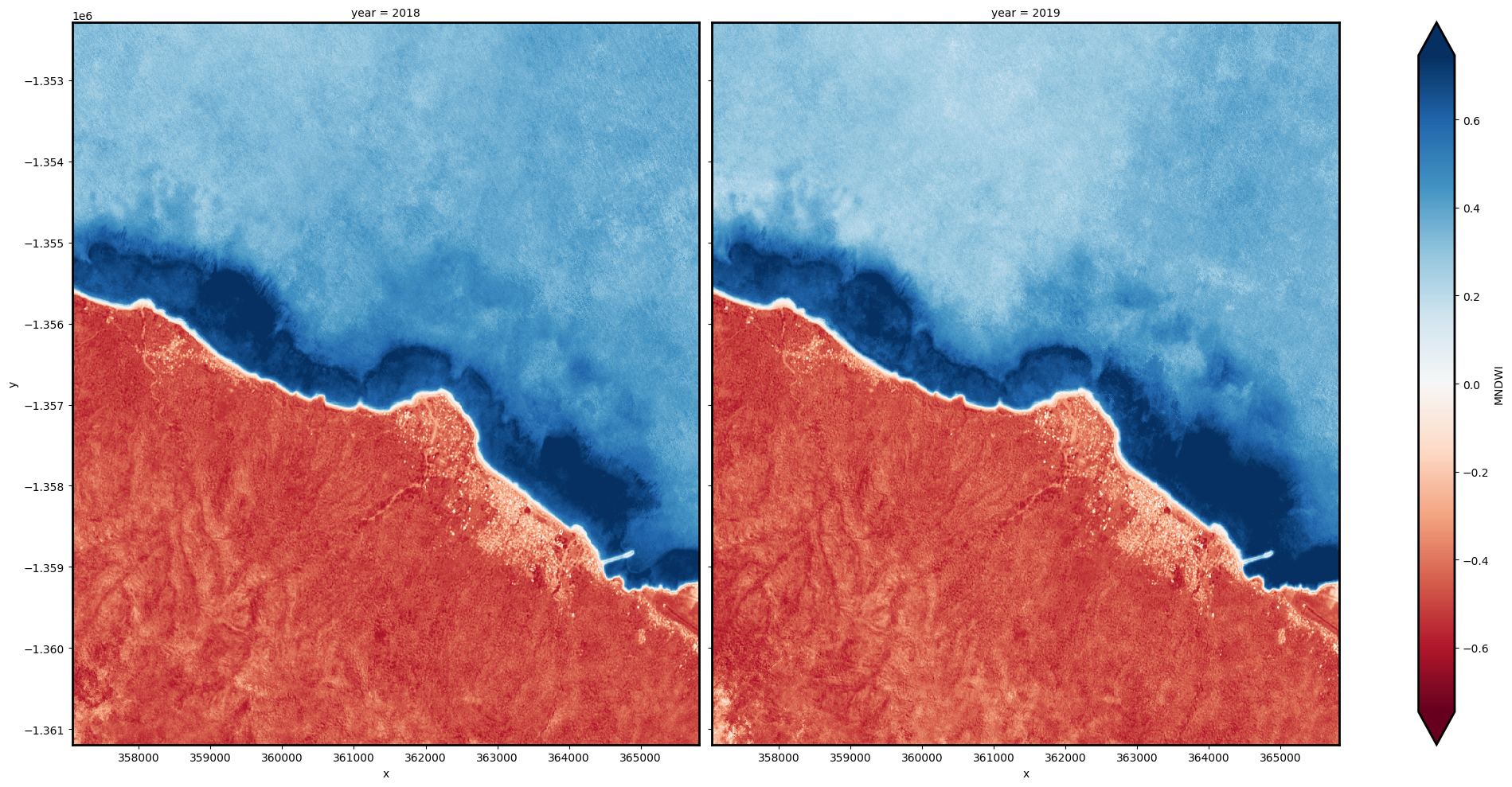

When it comes to interpreting the index, high values (blue colours) typically represent water pixels, while low values (red colours) represent land.

[14]:

# Combine into summary images by `time_step`

if (product_name == "ls") or (product_name == "s2") or (product_name == "ls_s2"):

var = "MNDWI"

else:

var = "vh"

ds_summaries = ds_selected[[var]].resample(time=time_step).median("time").compute()

# Rename time attribute as year

ds_summaries["time"] = ds_summaries.time.dt.year

ds_summaries = ds_summaries.rename(time="year")

# Plot the output summary images

ds_summaries[var].plot(col="year", cmap="RdBu", col_wrap=2, robust=True, size=10)

plt.show()

INFO:distributed.utils_perf:full garbage collection released 680.29 MiB from 282 reference cycles (threshold: 9.54 MiB)

[15]:

# Shut down Dask client now that we have processed the data we need

client.close()

INFO:distributed.nanny:Closing Nanny at 'tcp://127.0.0.1:37771'. Reason: nanny-close

INFO:distributed.nanny:Nanny asking worker to close. Reason: nanny-close

INFO:distributed.core:Received 'close-stream' from tcp://127.0.0.1:49354; closing.

INFO:distributed.scheduler:Remove worker <WorkerState 'tcp://127.0.0.1:42929', name: 0, status: closing, memory: 0, processing: 0>

INFO:distributed.core:Removing comms to tcp://127.0.0.1:42929

INFO:distributed.scheduler:Lost all workers

INFO:distributed.scheduler:Scheduler closing...

INFO:distributed.scheduler:Scheduler closing all comms

Extract shorelines and calculate rates of coastal change¶

Extract shorelines from imagery¶

We now want to extract an accurate shoreline for each of the summary images above. The code below identifies the boundary between land and water by tracing a line along pixels with a given threshold value. For Landsat and Sentinel-2 images, we use a water index value of 0.

For Sentinel-1 images, the threshold could be determined either through simple automatic thresholding, or using a more complicated supervised classification method. In this notebook we use the threshold_minimum function, which computes the histogram for all backscatter values, smooths it until there are only two maxima and find the minimum in between as the threshold.

We use the subpixel_contours function to identify the boundary between land and water by tracing a line along pixels with the previously identified threshold value. It returns a vector file with one line for each time step:

[16]:

if (product_name == "ls") or (product_name == "s2") or (product_name == "ls_s2"):

threshold = 0

else:

threshold = threshold_minimum(ds_selected[var].values[~np.isnan(ds_selected[var].values)])

contour_gdf = subpixel_contours(

da=ds_summaries[var],

z_values=threshold,

dim="year",

crs=ds_summaries.geobox.crs,

output_path="annual_shorelines_{}.geojson".format(product_name),

min_vertices=15,

)

contour_gdf = contour_gdf.set_index("year")

# Preview shoreline data

contour_gdf

Operating in single z-value, multiple arrays mode

Writing contours to annual_shorelines_s2.geojson

[16]:

| geometry | |

|---|---|

| year | |

| 2018 | MULTILINESTRING ((365815.000 -1359192.754, 365... |

| 2019 | MULTILINESTRING ((365815.000 -1359189.246, 365... |

Plot resampled shorelines on an interactive map¶

The next cell provides an interactive map with an overlay of the shorelines identified in the previous cell. Run it to view the map (this step can take several minutes to load if the study area is large).

Zoom in to the map below to explore the resulting set of shorelines. Older shorelines are coloured in black, and more recent shorelines in yellow. Hover over the lines to see the time period for each shoreline printed above the map. Using this data, we can easily identify areas of coastline or rivers that have changed significantly over time, or areas that have remained stable over the entire time period.

[17]:

# Plot shorelines on interactive map

bounds = np.arange(2018, 2022, 1)

contour_gdf.reset_index().explore(

column="year",

cmap="inferno",

categorical=True,

tiles="https://server.arcgisonline.com/ArcGIS/rest/services/World_Imagery/MapServer/tile/{z}/{y}/{x}",

attr="ESRI WorldImagery",

)

[17]:

Calculate rates of coastal change¶

To identify parts of the coastline that are changing rapidly, we can use our annual shoreline data to calculate rates of coastal change in metres per year. This can be particularly useful to reveal hotspots of coastal retreat (e.g. erosion), or hotspots of coastal growth.

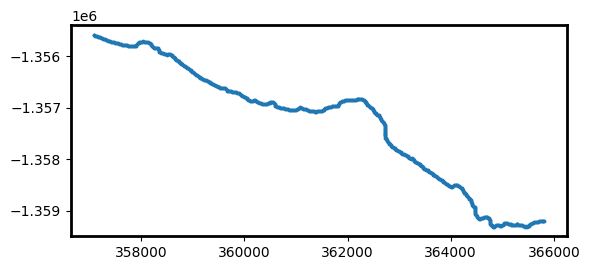

To do this, we first need to create a set of evenly spaced points at every 20 metres along the most recent shoreline in our dataset. These points will be used to plot rates of coastal change across our study area.

[18]:

# Extract points at every 30 metres along the most recent shoreline

points_gdf = points_on_line(contour_gdf, index=2019, distance=20)

points_gdf.plot(markersize=3)

[18]:

<Axes: >

Now that we have a set of modelling points, we can measure distances from each of the points to each annual shoreline. This gives us a table of distances, where negative values (e.g. -6.5) indicate that an annual shoreline was located inland of our points, and positive values (e.g. 2.3) indicate a shoreline was located towards the ocean. Because our points were created along our most recent 2021 shoreline, distances for 2021 will always have a distance of 0 m.

[19]:

# For each 30 m-spaced point, calculate the distance from

# the most recent 2021 shoreline to each other annual shoreline

# in the datasets.

points_gdf = annual_movements(

points_gdf, contours_gdf=contour_gdf, yearly_ds=ds_summaries, baseline_year=2019, water_index=var

)

points_gdf

[19]:

| geometry | dist_2018 | dist_2019 | angle_mean | angle_std | |

|---|---|---|---|---|---|

| 0 | POINT (365815.000 -1359189.246) | -3.51 | 0.0 | 0 | 0 |

| 1 | POINT (365796.826 -1359197.551) | -0.08 | 0.0 | 0 | 0 |

| 2 | POINT (365779.450 -1359193.014) | -2.37 | 0.0 | 172 | 8 |

| 3 | POINT (365760.749 -1359198.903) | -1.16 | 0.0 | 4 | 4 |

| 4 | POINT (365740.930 -1359197.280) | -1.15 | 0.0 | 175 | 5 |

| ... | ... | ... | ... | ... | ... |

| 551 | POINT (357151.802 -1355617.561) | -1.15 | 0.0 | 10 | 10 |

| 552 | POINT (357134.775 -1355607.871) | -3.12 | 0.0 | 10 | 10 |

| 553 | POINT (357115.352 -1355603.583) | -1.04 | 0.0 | 6 | 6 |

| 554 | POINT (357098.175 -1355593.421) | -3.92 | 0.0 | 13 | 13 |

| 555 | POINT (357079.532 -1355586.966) | -0.32 | 0.0 | 17 | 18 |

556 rows × 5 columns

Finally, we can calculate annual rates of coastal change (in metres per year) using linear regression. This will add several new columns to our table:

rate_time: Annual rates of change (in metres per year) calculated by linearly regressing annual shoreline distances against time (excluding outliers; seeoutl_time). Negative values indicate retreat and positive values indicate growth.sig_time: Significance (p-value) of the linear relationship between annual shoreline distances and time. Small values (e.g. p-value < 0.01 or 0.05) may indicate a coastline is undergoing consistent coastal change through time.se_time: Standard error (in metres) of the linear relationship between annual shoreline distances and time. This can be used to generate confidence intervals around the rate of change given by rate_time (e.g. 95% confidence interval =se_time * 1.96)outl_time: Individual annual shoreline are noisy estimators of coastline position that can be influenced by environmental conditions (e.g. clouds, breaking waves, sea spray) or modelling issues (e.g. poor tidal modelling results or limited clear satellite observations). To obtain reliable rates of change, outlier shorelines are excluded using a robust Median Absolute Deviation outlier detection algorithm, and recorded in this column.

[20]:

# Calculate rates of change using linear regression

points_gdf = calculate_regressions(points_gdf=points_gdf, contours_gdf=contour_gdf)

points_gdf

[20]:

| rate_time | sig_time | se_time | outl_time | dist_2018 | dist_2019 | angle_mean | angle_std | geometry | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 3.51 | 0.0 | 0.0 | -3.51 | 0.0 | 0 | 0 | POINT (365815.000 -1359189.246) | |

| 1 | 0.08 | 0.0 | 0.0 | -0.08 | 0.0 | 0 | 0 | POINT (365796.826 -1359197.551) | |

| 2 | 2.37 | 0.0 | 0.0 | -2.37 | 0.0 | 172 | 8 | POINT (365779.450 -1359193.014) | |

| 3 | 1.16 | 0.0 | 0.0 | -1.16 | 0.0 | 4 | 4 | POINT (365760.749 -1359198.903) | |

| 4 | 1.15 | 0.0 | 0.0 | -1.15 | 0.0 | 175 | 5 | POINT (365740.930 -1359197.280) | |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 551 | 1.15 | 0.0 | 0.0 | -1.15 | 0.0 | 10 | 10 | POINT (357151.802 -1355617.561) | |

| 552 | 3.12 | 0.0 | 0.0 | -3.12 | 0.0 | 10 | 10 | POINT (357134.775 -1355607.871) | |

| 553 | 1.04 | 0.0 | 0.0 | -1.04 | 0.0 | 6 | 6 | POINT (357115.352 -1355603.583) | |

| 554 | 3.92 | 0.0 | 0.0 | -3.92 | 0.0 | 13 | 13 | POINT (357098.175 -1355593.421) | |

| 555 | 0.32 | 0.0 | 0.0 | -0.32 | 0.0 | 17 | 18 | POINT (357079.532 -1355586.966) |

556 rows × 9 columns

Plot rates of coastal change on an interactive map¶

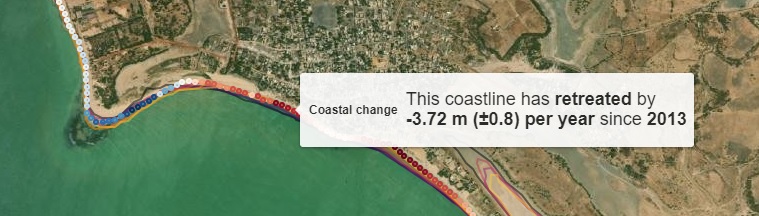

Now that we have calculated rates of coastal change, we can plot these on an interactive map to identify parts of the coastline that are retreating or growing over time.

When the map appears below, hover your mouse over the coloured dots that appear along the coastline for a summary of recent coastal change at those locations. Red dots represent locations that are retreating (e.g. erosion), and blue dots represent locations that are growing.

[21]:

# Add human-friendly label for plotting

points_gdf["Coastal change"] = points_gdf.apply(

lambda x: f'<h4>This coastline has {"<b>retreated</b>" if x.rate_time < 0 else "<b>grown</b>"} '

f"by</br><b>{x.rate_time:.2f} m (±{x.se_time:.1f}) per year</b> since "

f"<b>{contour_gdf.index[0]}</b></h4>",

axis=1,

)

points_gdf.loc[points_gdf.sig_time > 0.05, "Coastal change"] = f"<h4>No significant trend of retreat or growth)</h4>"

# Add annual shorelines to map

m = contour_gdf.reset_index().explore(

column="year",

cmap="inferno",

tiles="https://server.arcgisonline.com/ArcGIS/rest/services/World_Imagery/MapServer/tile/{z}/{y}/{x}",

tooltip=False,

style_kwds={"opacity": 0.5},

attr="ESRI WorldImagery",

categorical=True,

)

# Add rates of change to map

points_gdf.explore(m=m, column="rate_time", vmin=-5, vmax=5, tooltip="Coastal change", cmap="RdBu")

[21]:

Important note: This notebook may produce misleading rates of change for non-coastal waterbodies that might fluctuate naturally year-by-year. The full Digital Earth Africa Coastlines repository contains additional methods for producing more accurate rates of change by cleaning and filtering annual shoreline data to focus only on coastal shorelines.

Export rates of change to file¶

Finally, we can export our output rates of change file so that it can be loaded in GIS software (e.g. ESRI ArcGIS or QGIS).

[22]:

points_gdf.to_crs("EPSG:4326").to_file("rates_of_changes_{}.geojson".format(product_name))

Prochaines étapes¶

When you are done, return to the « Set up analysis » cell, modify some values (e.g. time_range, time_step or lat/lon) and rerun the analysis. If you’re going to change the location, you’ll need to make sure at least one of Landsat, Sentinel-2 and Sentinel-1 products is available for the new location, which you can check at the DE Africa Explorer.

For more information about the method behind this notebook, read the scientific paper: > Bishop-Taylor, R., Nanson, R., Sagar, S., Lymburner, L. (2021). Mapping Australia’s dynamic coastline at mean sea level using three decades of Landsat imagery. Remote Sensing of Environment 267, 112734. Available: https://doi.org/10.1016/j.rse.2021.112734

Additional information¶

License: The code in this notebook is licensed under the Apache License, Version 2.0. Digital Earth Africa data is licensed under the Creative Commons by Attribution 4.0 license.

Contact: If you need assistance, please post a question on the Open Data Cube Slack channel or on the GIS Stack Exchange using the open-data-cube tag (you can view previously asked questions here). If you would like to report an issue with this notebook, you can file one on

Github.

Compatible datacube version:

[23]:

print(datacube.__version__)

1.8.15

Last Tested:

[24]:

from datetime import datetime

datetime.today().strftime("%Y-%m-%d")

[24]:

'2023-11-07'